Last Updated on August 22, 2024 11:33 am by Laszlo Szabo / NowadAIs | Published on August 22, 2024 by Laszlo Szabo / NowadAIs

Microsoft Phi 3.5 Update: A New Benchmark in AI Performance – Key Notes

- Microsoft’s Phi 3.5 series includes three advanced models: mini-instruct, MoE-instruct, and vision-instruct.

- Phi-3.5-mini-instruct excels in reasoning tasks within memory-constrained environments, with multilingual capabilities.

- Phi-3.5-MoE-instruct utilizes a “Mixture of Experts” architecture, balancing efficiency and task-specific performance.

- Phi-3.5-vision-instruct integrates multimodal analysis, handling complex text and image processing tasks with ease.

Phi 3.5 Updated by Microsoft

Microsoft has once again etched its name as a trailblazer with the release of its Phi 3.5 series. This lineup, comprising the Phi-3.5-mini-instruct, Phi-3.5-MoE-instruct, and Phi-3.5-vision-instruct models, has drawn wide attention in the AI community for its strong benchmark performance and multifaceted capabilities. Designed to cater to a diverse range of applications, from resource-constrained environments to complex reasoning tasks and multimodal analysis, these models change what is achievable with state-of-the-art AI technology.

Phi-3.5-mini-instruct: Compact Yet Formidable

The Phi-3.5-mini-instruct model is a true testament to Microsoft’s commitment to pushing the boundaries of AI efficiency. With a mere 3.8 billion parameters, this lightweight model outperforms larger models from industry giants like Meta and Google on a number of benchmarks. Its strength lies in its reasoning capabilities, making it an ideal choice for scenarios that demand robust logic-based reasoning, code generation, and mathematical problem-solving, all while operating within memory- and compute-constrained environments.

One of the standout features of the Phi-3.5-mini-instruct is its remarkable multilingual proficiency. Through rigorous training on a diverse corpus of data spanning multiple languages, this model has achieved near-state-of-the-art performance in multilingual and multi-turn conversational tasks. Whether engaging in dialogue or tackling complex linguistic challenges, the Phi-3.5-mini-instruct seamlessly adapts to various linguistic landscapes, ensuring consistent and reliable performance across a wide range of languages.

Benchmarking Excellence

To illustrate the prowess of the Phi-3.5-mini-instruct, let’s delve into its performance on several industry-recognized benchmarks:

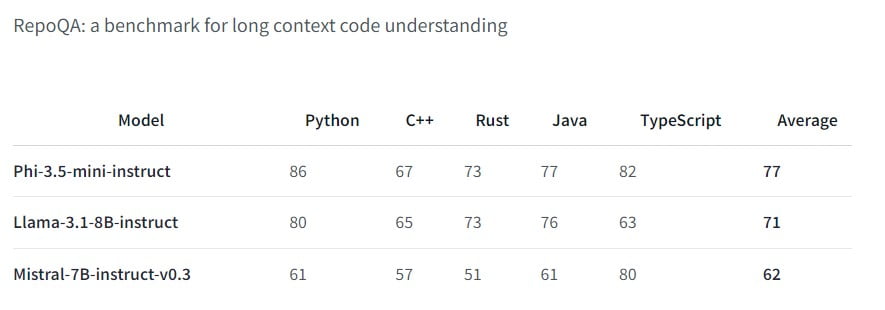

- RepoQA: Designed to evaluate long-context code understanding, the Phi-3.5-mini-instruct outperformed its larger counterparts, including Llama-3.1-8B-instruct and Mistral-7B-instruct, showcasing its exceptional aptitude in comprehending and reasoning about complex codebases.

- Multilingual MMLU: On this benchmark, which assesses multilingual language understanding across various domains and expertise levels, the Phi-3.5-mini-instruct achieved a remarkable score of 55.4%, surpassing the performance of models like Mistral-7B-Instruct-v0.3 and Llama-3.1-8B-Ins.

- Long Context Benchmarks: In tasks that demand the processing of extensive context, such as GovReport, QMSum, and SummScreenFD, the Phi-3.5-mini-instruct demonstrated its ability to maintain coherence and accuracy, outperforming larger models like Gemini-1.5-Flash and GPT-4o-mini-2024-07-18 (Chat).

These benchmark results underscore the Phi-3.5-mini-instruct’s exceptional capabilities, showcasing its ability to punch well above its weight class and deliver unparalleled performance in a wide range of tasks.

Phi-3.5-MoE-instruct: A Mixture of Expertise

The Phi-3.5-MoE-instruct model is built on a “Mixture of Experts” (MoE) architecture. This design places multiple specialized sub-networks, or “experts”, inside a single model and uses a router to decide which of them process each input. With 42 billion total parameters, yet only 6.6 billion active during generation, the Phi-3.5-MoE-instruct strikes a balance between computational efficiency and performance.
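To put the two parameter counts in perspective, the short calculation below works out what share of the model is active during generation. It uses only the 42 billion and 6.6 billion figures quoted above; the function name is purely illustrative.

```python
# Parameter counts quoted in the article, in billions.
TOTAL_PARAMS_B = 42.0
ACTIVE_PARAMS_B = 6.6


def active_fraction(active_b: float, total_b: float) -> float:
    """Return the share of parameters that are active during generation."""
    if total_b <= 0:
        raise ValueError("total parameter count must be positive")
    return active_b / total_b


fraction = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
print(f"Active share: {fraction:.1%}")
```

In other words, roughly one sixth of the weights take part in producing each output, which is where the efficiency gain comes from.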

Dynamic Task Adaptation

One of the key advantages of the Phi-3.5-MoE-instruct’s architecture is its ability to dynamically route each input to the most relevant “experts”. Because only the selected experts run, compute is spent where it is most useful rather than across all 42 billion parameters. Whether it’s tackling complex coding challenges, solving intricate mathematical problems, or navigating the nuances of multilingual language understanding, the Phi-3.5-MoE-instruct adapts by drawing on the collective expertise of its constituent experts.
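The routing idea can be sketched in a few lines of plain Python. This is a toy illustration of top-k gating as it is commonly described for MoE layers, not Microsoft's implementation: the four "experts", the router scores, and the choice of `top_k=2` are all hypothetical values picked for the example.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(gate_logits, top_k=2):
    """Pick the top_k experts for one input and normalise their weights.

    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:top_k]
    weights = softmax([gate_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_layer(token, experts, gate_logits, top_k=2):
    """Run only the selected experts and combine their outputs as a weighted sum."""
    return sum(weight * experts[idx](token) for idx, weight in route_token(gate_logits, top_k))


# Toy setup: four hypothetical "experts" that simply scale their input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
gate_logits = [0.1, 2.0, -1.0, 1.0]  # hypothetical router scores for one input

routing = route_token(gate_logits)
output = moe_layer(5.0, experts, gate_logits)
print(routing, output)
```

Only the two highest-scoring experts are evaluated here; the other two are skipped entirely, which mirrors how an MoE model keeps its active parameter count well below its total.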

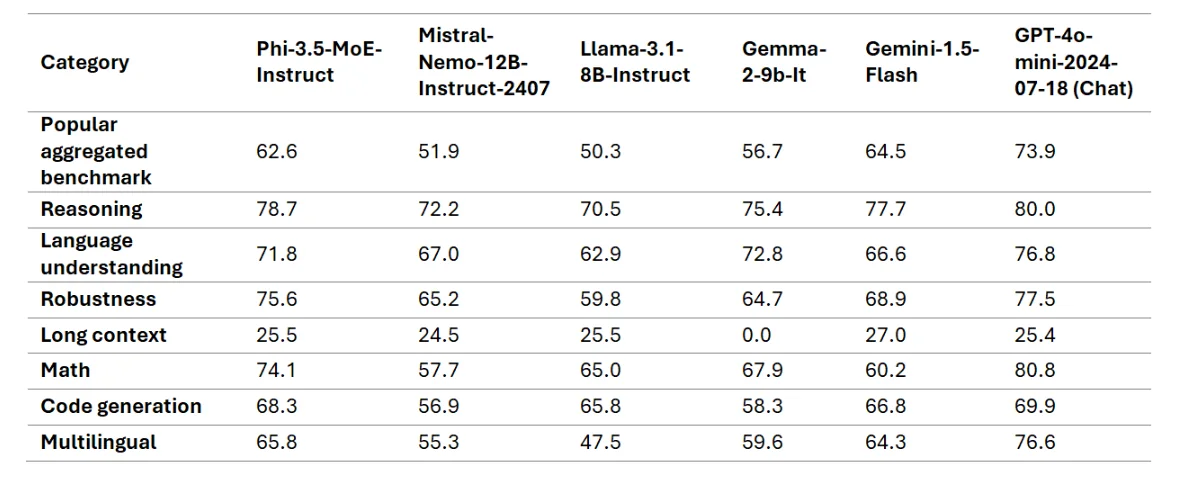

Benchmark Dominance

The Phi-3.5-MoE-instruct’s performance on industry benchmarks is nothing short of remarkable. On the widely acclaimed MMLU (Massive Multitask Language Understanding) benchmark, which evaluates models across a diverse range of subjects and expertise levels, the Phi-3.5-MoE-instruct outperformed OpenAI’s GPT-4o-mini in the 5-shot scenario. This achievement underscores the model’s exceptional reasoning abilities and its capacity to tackle complex, multifaceted tasks with unparalleled proficiency.

Moreover, the Phi-3.5-MoE-instruct’s prowess extends beyond language understanding. In the realm of code generation, as assessed by benchmarks like HumanEval and MBPP, the model consistently outperformed its competitors, showcasing its ability to generate accurate and efficient code across various programming languages and domains.
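For readers unfamiliar with the "5-shot" setting mentioned for MMLU, the sketch below shows the general idea: a handful of solved examples are placed in the prompt before the question the model must answer. The exact prompt layout varies between evaluation harnesses, so the format here is an assumption, and the sample questions are placeholders rather than real MMLU items.

```python
def build_few_shot_prompt(examples, question, choices):
    """Format solved examples followed by one unsolved multiple-choice question."""
    letters = "ABCD"
    blocks = []
    for ex in examples:
        lines = [ex["question"]]
        lines += [f"{letters[i]}. {c}" for i, c in enumerate(ex["choices"])]
        lines.append(f"Answer: {ex['answer']}")
        blocks.append("\n".join(lines))
    # The final question ends with an open "Answer:" for the model to complete.
    final = [question] + [f"{letters[i]}. {c}" for i, c in enumerate(choices)] + ["Answer:"]
    blocks.append("\n".join(final))
    return "\n\n".join(blocks)


# Placeholder examples (not taken from MMLU); a 5-shot run would pass five.
shots = [
    {"question": "What is 2 + 2?", "choices": ["3", "4", "5", "6"], "answer": "B"},
    {"question": "Which planet is closest to the Sun?",
     "choices": ["Venus", "Earth", "Mercury", "Mars"], "answer": "C"},
]
prompt = build_few_shot_prompt(shots, "What is 3 * 3?", ["6", "9", "12", "15"])
print(prompt)
```

The model's score is then the fraction of questions for which its completion matches the correct letter.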

Phi-3.5-vision-instruct: Unlocking Multimodal Possibilities

In today’s data-rich landscape, where information is often presented in various formats, including text, images, and videos, the need for AI models that can integrate and comprehend these diverse modalities has never been more pressing. Enter the Phi-3.5-vision-instruct, Microsoft’s multimodal model that blends text and image processing capabilities into a single framework.

With 4.2 billion parameters and a specialized architecture that includes an image encoder, connector, projector, and the Phi-3-Mini language model, the Phi-3.5-vision-instruct is uniquely equipped to tackle a wide range of multimodal tasks. From general image understanding and optical character recognition to chart and table comprehension, and even video summarization, this model is poised to revolutionize the way we interact with and extract insights from diverse data sources.

Multimodal Mastery

One of the standout features of the Phi-3.5-vision-instruct is its ability to handle complex, multi-frame visual tasks with ease. Whether it’s comparing images from different time points or summarizing a sequence of frames, the model’s extensive 128,000 token context length enables it to maintain coherence and accuracy throughout the entire process. This capability places the Phi-3.5-vision-instruct on par with much larger competitors, such as GPT-4o, while offering a more efficient and resource-friendly solution.
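A rough way to reason about multi-frame inputs is to treat the 128,000-token context as a budget shared between image frames and text. The sketch below does that arithmetic. The per-frame token cost and the amount reserved for text are hypothetical placeholders, since the article does not state how many tokens an image consumes.

```python
CONTEXT_TOKENS = 128_000  # context length quoted in the article


def max_frames(tokens_per_frame: int, reserved_text_tokens: int = 0,
               context_tokens: int = CONTEXT_TOKENS) -> int:
    """Return how many frames fit once text tokens are set aside."""
    if tokens_per_frame <= 0:
        raise ValueError("tokens_per_frame must be positive")
    available = max(context_tokens - reserved_text_tokens, 0)
    return available // tokens_per_frame


# Hypothetical costs: 2,000 tokens per frame, 4,000 tokens kept for prompt and answer.
frames = max_frames(tokens_per_frame=2_000, reserved_text_tokens=4_000)
print(f"Frames that fit: {frames}")
```

Swapping in measured token counts for a real workload gives a quick sanity check before sending a long frame sequence to the model.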

Benchmark Excellence

The Phi-3.5-vision-instruct’s performance on industry benchmarks is a testament to its multimodal prowess. In image-heavy tasks that traditionally require much larger models, the Phi-3.5-vision-instruct holds its own against its competitors. Whether it’s optical character recognition, chart comprehension, or video summarization, the model demonstrates its ability to extract insights and generate accurate, meaningful outputs from multimodal data sources.

Training Regimen: A Pursuit of Excellence

The exceptional performance of the Phi 3.5 series can be attributed, in part, to the rigorous training regimen employed by Microsoft’s AI experts. Each model underwent a meticulous training process, leveraging state-of-the-art techniques and vast computational resources to ensure optimal performance and robustness.

- Phi-3.5-mini-instruct: Trained on 3.4 trillion tokens using 512 H100-80G GPUs over a period of 10 days, the Phi-3.5-mini-instruct model was exposed to a diverse corpus of data, enabling it to develop a deep understanding of various domains and languages.

- Phi-3.5-MoE-instruct: The training process for the Phi-3.5-MoE-instruct was even more extensive, spanning 23 days and utilizing 512 H100-80G GPUs to train on 4.9 trillion tokens. This extensive training regimen allowed the model to develop and refine its specialized “experts,” ensuring optimal performance across a wide range of tasks.

- Phi-3.5-vision-instruct: To equip the Phi-3.5-vision-instruct with its multimodal capabilities, Microsoft employed 256 A100-80G GPUs to train the model on 500 billion vision and text tokens over a period of 6 days. This rigorous training process enabled the model to develop a deep understanding of the intricate relationships between text and visual data, laying the foundation for its exceptional multimodal performance.
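The figures above can be turned into a rough compute comparison. The sketch below multiplies GPU count by training days to get GPU-days and divides the token count by that total. All inputs come from the list above; the class and property names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingRun:
    name: str
    gpus: int
    days: int
    tokens: float  # total training tokens

    @property
    def gpu_days(self) -> int:
        """Total GPU-days: number of GPUs times days of training."""
        return self.gpus * self.days

    @property
    def tokens_per_gpu_day(self) -> float:
        """Average number of tokens processed per GPU per day."""
        return self.tokens / self.gpu_days


RUNS = [
    TrainingRun("Phi-3.5-mini-instruct", gpus=512, days=10, tokens=3.4e12),
    TrainingRun("Phi-3.5-MoE-instruct", gpus=512, days=23, tokens=4.9e12),
    TrainingRun("Phi-3.5-vision-instruct", gpus=256, days=6, tokens=500e9),
]

for run in RUNS:
    print(f"{run.name}: {run.gpu_days:,} GPU-days, "
          f"{run.tokens_per_gpu_day / 1e6:,.0f}M tokens per GPU-day")
```

Note that the vision model was trained on A100 GPUs while the other two used H100 GPUs, so the GPU-day totals are not directly comparable across all three runs.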

Throughout the training process, Microsoft’s AI experts employed a combination of supervised fine-tuning, proximal policy optimization, and direct preference optimization techniques. These advanced methods ensured precise instruction adherence and robust safety measures, resulting in models that not only deliver exceptional performance but also adhere to the highest standards of reliability and trustworthiness.
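Of the three techniques named above, direct preference optimization has the most compact objective. The sketch below implements the standard DPO loss for a single preference pair as it is usually written, given log-probabilities from the model being trained and from a frozen reference model. It is a generic illustration, not Microsoft's training code, and the input values and `beta=0.1` are hypothetical.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) response pair.

    Each argument is the summed log-probability of a full response.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) computed as softplus(-x) in a numerically stable form.
    return max(-logits, 0.0) + math.log1p(math.exp(-abs(logits)))


# Policy identical to the reference: the loss equals log(2).
neutral = dpo_loss(-11.0, -11.0, -11.0, -11.0)

# Policy favours the chosen response more than the reference does: lower loss.
improved = dpo_loss(-10.0, -12.0, -11.0, -11.0)
print(neutral, improved)
```

Minimizing this loss pushes the model to raise the likelihood of preferred responses relative to rejected ones, while the reference terms keep it from drifting too far from its starting point.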

Open-Source Collaboration: Empowering the AI Community

In a move that has garnered widespread praise from the AI community, Microsoft has released all three Phi 3.5 models under the permissive, open-source MIT license. This decision reflects the company’s commitment to fostering innovation and collaboration within the AI ecosystem, enabling developers and researchers from around the world to freely access, modify, and commercialize these cutting-edge models.

By embracing an open-source approach, Microsoft is not only democratizing access to state-of-the-art AI technology but also encouraging a collaborative effort to further advance the field. Developers and researchers can now build upon the foundation laid by the Phi 3.5 series, contributing their own insights and innovations to push the boundaries of what is achievable with these models.

Fostering Innovation and Accessibility

The open-source nature of the Phi 3.5 models has the potential to catalyze innovation across a wide range of industries and applications. From agriculture and manufacturing to healthcare and finance, the accessibility of these models empowers organizations of all sizes to integrate cutting-edge AI capabilities into their products and services, driving efficiency, productivity, and innovation.

Moreover, the open-source approach aligns with Microsoft’s commitment to making AI technology more accessible and inclusive. By removing barriers to entry and fostering a collaborative ecosystem, the Phi 3.5 series has the potential to democratize access to advanced AI capabilities, enabling developers and researchers from diverse backgrounds and regions to contribute to the field’s advancement.

Responsible AI: Prioritizing Ethics and Safety

While the Phi 3.5 series represents a significant technological advancement, Microsoft recognizes the importance of responsible AI development and deployment. As such, the company has implemented rigorous safety measures and ethical considerations to ensure that these models are used in a manner that is fair, reliable, and aligned with societal values.

Comprehensive Safety Evaluation

Prior to their release, the Phi 3.5 models underwent extensive safety evaluations, including red teaming, adversarial conversation simulations, and multilingual safety evaluation benchmark datasets. These evaluations aimed to assess the models’ propensity to produce undesirable outputs across multiple languages and risk categories, ensuring that appropriate safeguards were in place.

One of the key findings from these evaluations was the positive impact of safety post-training techniques, as detailed in the Phi-3 Safety Post-Training paper. The models demonstrated improved refusal rates for generating undesirable outputs and increased robustness against jailbreak techniques, even in non-English languages.

Addressing Potential Limitations

Despite these safety measures, Microsoft acknowledges that the Phi 3.5 models, like any language model, may still exhibit certain limitations and biases. These include the potential for factual inaccuracies, particularly in tasks that require extensive factual knowledge, as well as the possibility of perpetuating stereotypes or representing certain groups disproportionately.

To mitigate these risks, Microsoft encourages developers to follow responsible AI best practices, including mapping, measuring, and mitigating risks associated with their specific use case and its cultural and linguistic context. The company also recommends fine-tuning the models for specific use cases and leveraging them as part of broader AI systems with language-specific safeguards in place.

Ethical Considerations

Beyond technical safeguards, Microsoft emphasizes the importance of ethical considerations in the development and deployment of AI models. This includes assessing the suitability of using the Phi 3.5 models in high-risk scenarios where unfair, unreliable, or offensive outputs could lead to harm, such as providing advice in sensitive or expert domains like legal or health advice.

Additionally, Microsoft encourages developers to follow transparency best practices, informing end-users that they are interacting with an AI system and implementing feedback mechanisms to ground responses in use-case-specific, contextual information.

Conclusion: A Paradigm Shift in AI Capabilities

The release of the Phi 3.5 series by Microsoft represents a paradigm shift in the capabilities of AI models. By combining cutting-edge performance, efficiency, and versatility, these models are poised to revolutionize the way we approach AI-powered solutions across a wide range of industries and applications.

From the compact yet formidable Phi-3.5-mini-instruct, capable of delivering exceptional reasoning capabilities in resource-constrained environments, to the innovative Phi-3.5-MoE-instruct, with its dynamic task adaptation and unparalleled efficiency, and the multimodal prowess of the Phi-3.5-vision-instruct, Microsoft has demonstrated its commitment to pushing the boundaries of what is achievable with AI technology.

Descriptions

- Phi-3.5-mini-instruct: A compact AI model with 3.8 billion parameters designed for high-efficiency reasoning and code generation, particularly in environments with limited computational resources.

- Mixture of Experts (MoE): An AI architecture in which a single model contains multiple specialized sub-networks (experts). Only a subset of experts is activated for a given input, optimizing resource use.

- Multimodal Model: An AI model capable of processing and integrating multiple types of data, such as text and images, to generate insights or perform tasks. Phi-3.5-vision-instruct is an example, excelling in tasks requiring the interpretation of both visual and textual data.

- Benchmarking: The process of testing and comparing a model’s performance against established standards or tasks. Phi 3.5 models were tested on tasks like RepoQA for code understanding and MMLU for language understanding.

- Red Teaming: A safety evaluation method where teams try to exploit weaknesses in AI models, aiming to identify and fix vulnerabilities before deployment. Microsoft used red teaming in the safety assessments of Phi 3.5 models.

- Open-Source MIT License: A permissive license that allows users to freely use, modify, and distribute software or models. Microsoft released the Phi 3.5 series under this license to encourage community collaboration and innovation.

Frequently Asked Questions

- What is the Microsoft Phi 3.5 Update?

The Microsoft Phi 3.5 update includes a series of AI models (mini-instruct, MoE-instruct, and vision-instruct) designed to excel in various tasks, from reasoning in constrained environments to handling complex multimodal data.

- How does Phi-3.5-mini-instruct differ from other AI models?

Phi-3.5-mini-instruct is a compact model with just 3.8 billion parameters but delivers high performance in logic-based reasoning and multilingual tasks, even outperforming larger models from competitors.

- What is the Mixture of Experts (MoE) model in Microsoft Phi 3.5?

The Phi-3.5-MoE-instruct uses a “Mixture of Experts” architecture, where different specialized models handle specific tasks. This dynamic task adaptation ensures high efficiency and accuracy across various domains.

- What capabilities does Phi-3.5-vision-instruct offer?

Phi-3.5-vision-instruct is a multimodal AI model that integrates text and image data, excelling in tasks like optical character recognition and video summarization, offering robust performance in diverse applications.

- Why did Microsoft release Phi 3.5 models as open-source?

Microsoft released the Phi 3.5 models under an open-source MIT license to encourage global collaboration and innovation, allowing developers and researchers to build upon and improve these AI models.