Last Updated on November 4, 2024 1:14 pm by Laszlo Szabo / NowadAIs | Published on November 4, 2024 by Laszlo Szabo / NowadAIs

Meet LongVu: The AI That’s Cracking the Code of Long Videos – Key Notes:

- LongVu processes multiple video elements simultaneously (visuals, audio, text) to understand content like a human would

- The system can handle lengthy videos efficiently, making it useful for content moderation and education

- Unlike previous tools, LongVu maintains context throughout long videos, similar to how people follow movie plots

LongVu: The AI Video Assistant That Never Gets Bored

Ever watched a two-hour video and wished you could instantly know what’s important without watching the whole thing? Or maybe you’ve wondered how YouTube manages to catch inappropriate content in the millions of hours of video uploaded every day? Well, Meta AI might have just solved these problems with their newest creation: LongVu.

What’s the Big Deal About LongVu?

Imagine having a super-smart friend who can watch hours of video content and tell you exactly what’s happening, when it happened, and why it matters. That’s LongVu in a nutshell. It’s like having a master chef in your kitchen who can taste, smell, and see all the ingredients coming together to create the perfect dish – except instead of ingredients, LongVu processes words, sounds, and visuals from videos.

Think about how you understand a movie: you don’t just watch the pictures – you listen to the dialogue, read facial expressions, and follow the story as it unfolds. LongVu does the same thing, but it can do it faster and across longer videos than any human could manage.

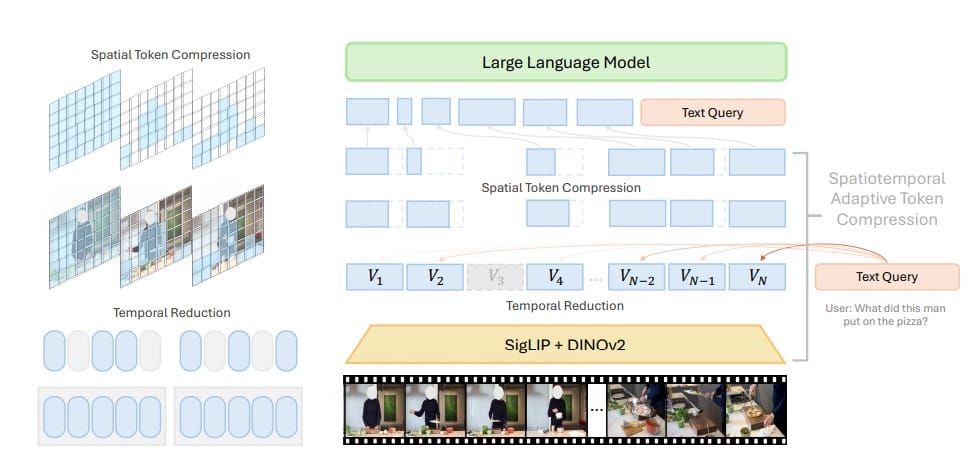

The Secret Sauce: How Does It Work?

LongVu is like a master juggler who can keep multiple balls in the air at once. These “balls” are:

- The visual story (what you see)

- The soundtrack (what you hear)

- The words being spoken

- The way everything connects over time

But here’s where it gets really cool: LongVu doesn’t just juggle these elements – it weaves them together into a complete understanding of what’s happening. It’s like having subtitles, director’s commentary, and a film critic’s analysis all rolled into one.
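For the curious, here is a toy sketch of what "weaving together" could mean in the simplest possible terms: three time-stamped streams merged into one timeline. This is not LongVu's actual method, which the article does not describe at this level. The `Event` class, the `merge_streams` helper, and all of the sample data are made up purely for illustration.

```python
from dataclasses import dataclass
import heapq


@dataclass(frozen=True)
class Event:
    """One observation from a single stream (hypothetical structure)."""
    time_s: float  # seconds from the start of the video
    stream: str    # "visual", "audio", or "speech"
    note: str      # short description of what was observed


def merge_streams(*streams):
    """Merge streams that are each already sorted by time into one timeline."""
    return list(heapq.merge(*streams, key=lambda event: event.time_s))


# Made-up sample data for a short cooking clip.
visual = [
    Event(2.0, "visual", "a chef cracks two eggs"),
    Event(9.5, "visual", "the omelet is folded in the pan"),
]
audio = [Event(4.0, "audio", "butter sizzling")]
speech = [Event(3.0, "speech", "now we heat the butter")]

timeline = merge_streams(visual, audio, speech)
for event in timeline:
    print(f"{event.time_s:5.1f}s [{event.stream}] {event.note}")
```

Once everything sits on one timeline, a question like "what happened right before the omelet was folded?" becomes a simple lookup. A real system has to learn those connections rather than being handed neat labels.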

What Can We Actually Do With This?

Let’s get practical. Here’s where LongVu could make your life easier:

Finding That One Scene You Love: Remember frantically scrubbing through a video trying to find that one perfect moment? LongVu could help you jump right to it.

Safer Online Spaces: It could help spot inappropriate content before it reaches viewers, making platforms safer for everyone.

Education Revolution: Imagine having a smart study buddy that can summarize a three-hour lecture into the key points you need to know.
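To make the "jump right to it" idea concrete, here is a minimal sketch that assumes a video has already been turned into time-stamped descriptions. It uses plain keyword overlap, which is far cruder than anything a model like LongVu would do. The `find_moment` function and the sample segments are hypothetical, not part of any Meta tool.

```python
def find_moment(segments, query):
    """Return the (start_seconds, description) pair that best matches the query.

    Matching is naive word overlap; returns None when nothing overlaps.
    """
    query_words = set(query.lower().split())

    def overlap(segment):
        _, description = segment
        return len(query_words & set(description.lower().split()))

    best = max(segments, key=overlap)
    return best if overlap(best) > 0 else None


# Made-up descriptions for a two-hour compilation.
segments = [
    (0, "host introduces the compilation"),
    (1845, "a cat jumps and misses the couch"),
    (7200, "closing credits roll"),
]

hit = find_moment(segments, "cat misses couch")
if hit is not None:
    start_seconds, description = hit
    print(f"Jump to {start_seconds // 60} min: {description}")
```

The hard part, and the part a video model is meant to handle, is producing good descriptions for hours of footage in the first place. The search step at the end is the easy bit.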

The Behind-the-Scenes Magic

While we won’t get too technical here, it’s worth noting that LongVu learned its skills by watching countless videos – everything from Hollywood blockbusters to your cousin’s wedding video (okay, maybe not that specific one, but you get the idea). It’s like it went to a massive film school where it learned to understand every type of video content imaginable.

Why This Matters for Everyone

You might be thinking, “Cool tech, but why should I care?” Well, consider this: How much time do you spend watching videos online? Whether it’s for work, education, or entertainment, video is everywhere. LongVu could help you:

- Find exactly what you’re looking for in long videos

- Get better recommendations based on actual video content

- Access videos more easily if you have visual or hearing impairments

- Save time by getting quick summaries of lengthy content

The Road Ahead

As impressive as LongVu is, it’s just the beginning. Think of it as the first smartphone: a big change for its time, but just a hint of what’s to come. The future might bring us AI that can create custom video summaries based on your interests, or even help filmmakers edit their movies.

The Human Touch

Of course, with great power comes great responsibility (thanks, Spider-Man). Meta AI knows this, which is why they’re thinking carefully about privacy and ethical concerns. After all, we want AI that helps us understand videos better, not one that intrudes on our personal lives.

The Bottom Line

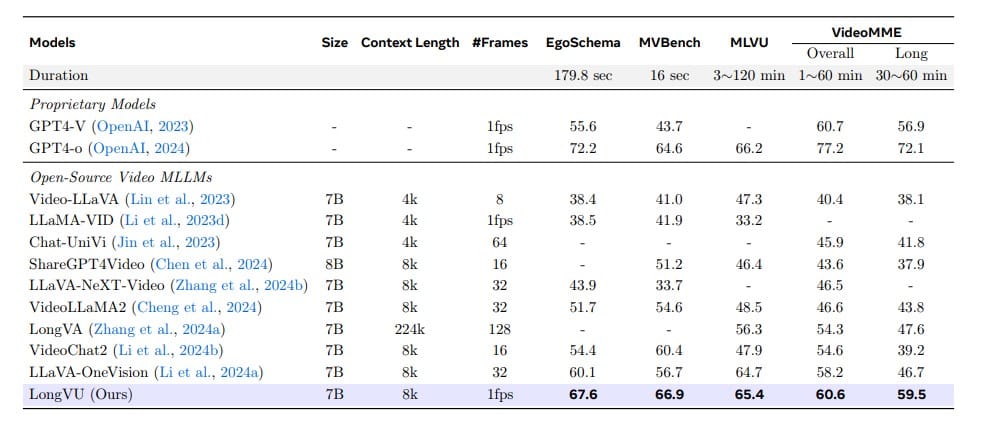

LongVu represents a huge leap forward in how machines understand videos. It’s like we’ve upgraded from a flip phone to a smartphone in the world of video AI. While it might sound like science fiction, this technology is very real and could soon be making your life easier in ways you never imagined.

Whether you’re a content creator, a student, or just someone who loves watching videos online, LongVu’s abilities could change how you interact with video content. And who knows? Maybe someday soon, you’ll be able to ask your device to find that hilarious cat moment in your three-hour video compilation – and it’ll take you right there in seconds.

Definitions:

- Multimodal Processing: When something can handle different types of information at once (like seeing, hearing, and reading) – just like how you use multiple senses to experience the world

- Content Moderation: The process of reviewing online content to remove inappropriate material before it reaches viewers

- Temporal Reasoning: Understanding how events relate to each other over time – like knowing that in a cooking video, you crack the eggs before making the omelet

- Real-time Processing: Analyzing information as it happens, not after the fact – like a sports commentator describing a game as it unfolds

Frequently Asked Questions:

What makes LongVu different from other video AI systems? LongVu stands out because it processes videos more like a human would. It doesn’t just look at individual frames or listen to isolated sounds – it combines everything it sees and hears into one coherent understanding. Think of how you watch a movie: you’re processing the actors’ expressions, their words, the background music, and the overall story all at once. LongVu does this too, but it can handle much longer videos without getting tired or losing track of what’s happening. This makes it especially good at tasks like finding specific moments in long videos or summarizing lengthy content.

How can LongVu help everyday users? LongVu can assist users in several practical ways. If you’re a student, it can take a long lecture and pull out the key points you need to know, saving hours of study time. If you’re looking for a specific moment in a long video, LongVu can help you find it without watching the entire thing. Content creators can use it to better understand which parts of their videos are most engaging. The system can also help make videos more accessible by providing better descriptions and summaries for people with visual or hearing impairments.

What kind of videos can LongVu analyze? LongVu has been trained on an extensive variety of video content. Its training included everything from professional films and documentaries to user-generated content from social media. The system can handle educational lectures, entertainment content, social media posts, and professional productions. LongVu understands different video styles and formats, making it versatile enough to work with nearly any type of video content. This broad training helps it understand context and nuance across different types of videos.

Is LongVu safe to use with private videos? Meta has built LongVu with privacy considerations at its core. The system follows strict data protection guidelines and doesn’t store personal video content. Privacy safeguards are built into how LongVu processes and analyzes videos. The technology focuses on understanding video content while respecting user privacy, similar to how a human assistant would maintain confidentiality. Meta continues to update and improve these privacy protections as the technology develops.

What future improvements can we expect from LongVu? The current version of LongVu represents just the beginning of what’s possible with video AI. Future versions might offer more personalized video summaries based on what you’re interested in. The technology could expand to help with video editing, better content recommendations, and more sophisticated search capabilities. Meta is working on making the system even better at understanding context and nuance in videos. These improvements could lead to new applications we haven’t even thought of yet.