Last Updated on July 30, 2024 1:06 pm by Laszlo Szabo / NowadAIs | Published on July 30, 2024 by Laszlo Szabo / NowadAIs

Power of Llama 3.1: Meta’s Latest Open-Source AI Model – Key Notes

- Meta’s Llama 3.1 offers three models: 8B, 70B, and 405B parameters.

- All three Llama 3.1 models support a 128K-token context window and improved multilingual capabilities.

- Open weights available for download under the Llama 3.1 Community License.

- Significant improvements in training stability, data quality, and inference optimization.

Introduction – Meet the Power of Meta’s Llama 3.1 LLM

The release of Llama 3.1 by Meta Platforms has sent shockwaves through the industry. As the latest iteration of Meta’s open-source large language model, Llama 3.1 promises to redefine the boundaries of what is possible with AI technology. Here we dive into the capabilities, architecture, and ecosystem surrounding this model, exploring how it is poised to drive innovation and empower developers worldwide.

The Llama 3.1 Family: Unparalleled Capabilities Across the Spectrum

Meta’s Llama 3.1 is available in three distinct variants – the 8B, 70B, and the flagship 405B parameter models. Each of these versions boasts its own unique strengths, catering to a diverse range of use cases and requirements.

Llama 3.1 8B: A Versatile Workhorse

The 8B model, while the smallest in the Llama 3.1 lineup, is no slouch when it comes to performance. With solid capabilities in areas like general knowledge, math, and coding, the 8B variant is a strong choice for developers seeking a lightweight yet capable AI assistant. Its fast inference and low memory footprint make it a good fit for deployment on a wide range of platforms, from edge devices (typically with quantization) to cloud-based applications.

Llama 3.1 70B: Balancing Power and Efficiency

The 70B model strikes a balance between raw power and cost-effectiveness. This variant excels in tasks that require more advanced reasoning, multilingual proficiency, and robust tool use. Combined with the 128K-token context window shared by the whole Llama 3.1 family, these capabilities make the 70B model well-suited for complex use cases such as long-form text summarization, multilingual conversational agents, and sophisticated coding assistants.
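A 128K-token window is large but still finite, so applications that feed long chat histories or documents to the model usually trim the input to fit. The following is a minimal sketch, not Meta’s code: `fit_to_window` and `count_tokens` are hypothetical helpers, `count_tokens` is a crude word counter standing in for the real Llama 3.1 tokenizer, and “128K” is assumed to mean 131,072 tokens.

```python
# Minimal sketch of trimming chat history to fit a 128K-token context window.
# count_tokens is a hypothetical stand-in; real code would use the model's tokenizer.

CONTEXT_WINDOW = 128 * 1024  # assumption: "128K" read as 131,072 tokens


def count_tokens(text: str) -> int:
    """Crude placeholder that counts whitespace-separated words, not real tokens."""
    return len(text.split())


def fit_to_window(messages: list[str], reserve_for_output: int = 4096,
                  window: int = CONTEXT_WINDOW) -> list[str]:
    """Return the newest messages whose combined size fits the input budget."""
    budget = window - reserve_for_output  # leave room for the model's reply
    kept: list[str] = []
    used = 0
    # Walk from newest to oldest so the most recent context is kept.
    for msg in reversed(messages):
        cost = count_tokens(msg)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

For example, `fit_to_window(["a b c", "d e", "f"], reserve_for_output=0, window=3)` keeps only the two newest messages, `["d e", "f"]`. Dropping whole messages from the oldest end is the simplest policy; summarizing older turns is a common alternative.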

Llama 3.1 405B: The Flagship Powerhouse

The crown jewel of the Llama 3.1 family is the 405B parameter model. Meta describes it as the first openly available model that can rival the top AI models in general knowledge, steerability, math, tool use, and multilingual translation. That makes it the go-to choice for developers seeking to push the boundaries of what is possible with generative AI. From synthetic data generation to model distillation, the 405B model opens up new workflows for the open-source community.
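Model distillation, one of the 405B use cases mentioned above, trains a smaller student model to match a larger teacher’s output distribution. As a generic illustration (the article does not say which distillation method developers should use), here is the classic soft-target loss: the KL divergence between temperature-softened teacher and student distributions, scaled by T². The function names and the default temperature are assumptions for illustration.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Numerically stable softmax with temperature scaling."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max to avoid overflow in exp
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's softened predictions
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2
```

Identical logits give a loss of zero, and the further the student’s distribution drifts from the teacher’s, the larger the loss. In practice this term is computed at every token position and is often mixed with the ordinary next-token loss.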

Architectural Innovations: Scaling Llama to New Heights

Developing a model at the scale and complexity of Llama 3.1 405B was no easy feat. Meta’s team of AI researchers and engineers had to overcome challenges in training stability, data quality, and inference cost.

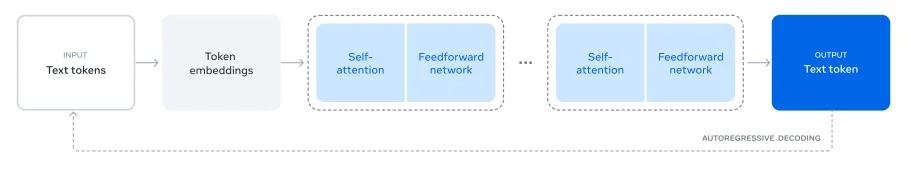

Optimizing for Training at Scale

To enable training the 405B model on over 15 trillion tokens, Meta made several key design choices. They opted for a standard decoder-only transformer architecture with minor adaptations, rather than a mixture-of-experts design, prioritizing training stability over more complex approaches. They also adopted an iterative post-training procedure in which each round uses supervised fine-tuning and direct preference optimization, creating high-quality synthetic data for each round and improving the model’s capabilities.
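Direct preference optimization, used in each post-training round, has a compact objective. For a prompt with a preferred response y_w and a rejected response y_l, the loss is -log σ(β[(log π(y_w) - log π_ref(y_w)) - (log π(y_l) - log π_ref(y_l))]), where π is the model being trained and π_ref is a frozen reference copy. The sketch below evaluates that formula for one pair from precomputed log-probabilities; the function name and the β = 0.1 default are illustrative assumptions, and Meta’s actual training code and hyperparameters are not described in the article.

```python
import math


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair, from summed response log-probabilities."""
    # How far the trained policy has moved from the frozen reference on each response.
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(z)) == log(1 + exp(-z)); branch on the sign of z for stability.
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))
```

When the policy and reference agree exactly, the loss is log 2 (about 0.693); it falls as the policy raises the chosen response’s likelihood relative to the rejected one. Branching on the sign of `z` keeps `exp` from overflowing for large margins.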

Enhancing Data Quality and Quantity

Recognizing the importance of data in model performance, Meta invested heavily in improving both the quantity and quality of the data used for pre- and post-training. This included developing more rigorous pre-processing and curation pipelines, as well as implementing advanced quality assurance and filtering techniques.
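The article says only that Meta built more rigorous pre-processing and curation pipelines. Two ingredients such pipelines commonly include are deduplication and heuristic quality filtering, and the toy version below shows both. Everything here (`normalize`, `passes_heuristics`, `curate`, and the thresholds) is a hypothetical illustration, far simpler than anything used at the 15-trillion-token scale.

```python
import hashlib


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return " ".join(text.lower().split())


def passes_heuristics(text: str, min_words: int = 5,
                      max_dup_line_ratio: float = 0.3) -> bool:
    """Illustrative filters: drop very short docs and docs dominated by repeated lines."""
    if len(text.split()) < min_words:
        return False
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        return False
    dup_ratio = 1 - len(set(lines)) / len(lines)  # share of lines that are repeats
    return dup_ratio <= max_dup_line_ratio


def curate(docs: list[str]) -> list[str]:
    """Exact-deduplicate (after normalization), then apply the heuristic filters."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # duplicate of a document already processed
        seen.add(digest)
        if passes_heuristics(doc):
            kept.append(doc)
    return kept
```

Hashing normalized text catches exact duplicates cheaply; near-duplicate detection (for example MinHash) and model-based quality classifiers are the usual next steps at scale.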

Optimizing for Large-Scale Inference

To support efficient inference of the 405B model, Meta quantized it from 16-bit (BF16) to 8-bit (FP8) numerics. This halves the memory needed to store the weights and lowers compute requirements, allowing the model to run within a single server node.
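The arithmetic behind the single-node claim is straightforward: BF16 stores each parameter in 2 bytes and FP8 in 1 byte. The sketch below assumes a node of eight 80 GB accelerators (640 GB total) and counts weights only; the KV cache, activations, and runtime overhead need additional memory, so real deployments have less headroom than these numbers suggest.

```python
# Bytes used to store one parameter in each numeric format.
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}

GPU_MEMORY_GB = 80  # assumption: 80 GB accelerators
GPUS_PER_NODE = 8   # assumption: 8 accelerators per server node


def weight_memory_gb(num_params: float, fmt: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


def fits_on_one_node(num_params: float, fmt: str) -> bool:
    """True if the weights fit in one node's combined accelerator memory.

    Ignores the KV cache, activations, and framework overhead, so True here
    is necessary but not sufficient for actually serving the model.
    """
    return weight_memory_gb(num_params, fmt) <= GPU_MEMORY_GB * GPUS_PER_NODE


for fmt in ("bf16", "fp8"):
    gb = weight_memory_gb(405e9, fmt)
    print(f"405B @ {fmt}: {gb:.0f} GB of weights, fits one node: {fits_on_one_node(405e9, fmt)}")
```

Running it reports 810 GB (does not fit) for BF16 and 405 GB (fits) for FP8, which is why halving the bytes per parameter brings the 405B weights within a single 640 GB node.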

Llama in Action: Instruction-Following and Chat Capabilities

One of the key focus areas for the Llama 3.1 development team was improving the model’s helpfulness, quality, and detailed instruction-following capabilities in response to user prompts. This was a significant challenge, especially when coupled with the increased model size and extended 128K context window.

Enhancing Instruction-Following

Meta’s approach to improving instruction-following involved several rounds of Supervised Fine-Tuning (SFT), Rejection Sampling (RS), and Direct Preference Optimization (DPO). By leveraging synthetic data generation and rigorous data processing techniques, the team was able to scale the amount of fine-tuning data across capabilities, ensuring high quality and safety across all tasks.
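Rejection sampling, one of the post-training stages listed above, can be sketched as best-of-n selection: sample several responses per prompt, score them, and keep only the best one as fine-tuning data for the next round. In this sketch `generate` and `reward` are hypothetical callables standing in for model sampling and a reward model, and the `min_reward` cutoff is an assumption; the article does not specify Meta’s selection criteria.

```python
from typing import Callable, Optional


def rejection_sample(prompt: str,
                     generate: Callable[[str, int], list[str]],
                     reward: Callable[[str, str], float],
                     n: int = 4,
                     min_reward: float = 0.0) -> Optional[str]:
    """Best-of-n selection: sample n responses and keep the highest-scoring one.

    Returns None when no candidate reaches min_reward, so the prompt can be
    left out of the next fine-tuning dataset.
    """
    candidates = generate(prompt, n)
    if not candidates:
        return None
    # Score each candidate once, then pick the best (score, response) pair.
    scored = [(reward(prompt, c), c) for c in candidates]
    best_score, best = max(scored, key=lambda pair: pair[0])
    return best if best_score >= min_reward else None
```

Returning `None` lets a data pipeline skip prompts where no sample is good enough, rather than training on a poor response.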

Strengthening Conversational Abilities

In addition to instruction-following, Meta also focused on enhancing the Llama 3.1 models’ chat capabilities. Through a combination of SFT, RS, and DPO, the team developed final chat models that maintain high levels of helpfulness, quality, and safety, even as the models grew in size and complexity.

The Llama Ecosystem: Unlocking New Possibilities

Meta’s vision for Llama 3.1 extends beyond the models themselves, encompassing a broader system that empowers developers to create custom offerings and unlock new workflows.

The Llama System: Orchestrating Components

Llama models were designed to work as part of a larger system, incorporating external tools and components. This “Llama System” vision includes the release of a full reference system, complete with sample applications and new components like Llama Guard 3 (a multilingual safety model) and Prompt Guard (a prompt injection filter).
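The “Llama System” idea places the model inside a pipeline of safety components. A minimal sketch of that orchestration follows: the prompt is screened before generation and the response after it. The three callables are hypothetical placeholders; in a real deployment `is_injection` might wrap Prompt Guard and `is_unsafe` might wrap Llama Guard 3, but their actual interfaces are not shown here.

```python
from typing import Callable


def guarded_chat(user_prompt: str,
                 is_injection: Callable[[str], bool],
                 is_unsafe: Callable[[str], bool],
                 model: Callable[[str], str],
                 refusal: str = "Sorry, I can't help with that.") -> str:
    """Wrap a model call with input and output safety checks."""
    # 1. Screen the prompt for injection attempts (Prompt Guard's role)
    #    and unsafe content (a Llama Guard 3-style classifier).
    if is_injection(user_prompt) or is_unsafe(user_prompt):
        return refusal
    # 2. Generate a response with the underlying model.
    response = model(user_prompt)
    # 3. Screen the response before returning it.
    if is_unsafe(response):
        return refusal
    return response
```

Keeping the checks as injected functions means the safety classifiers can be swapped or updated without touching the generation code.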

Defining the Llama Stack

To support the growth of the Llama ecosystem, Meta has proposed the “Llama Stack” (published as a request for comment) – a set of standardized and opinionated interfaces for building canonical toolchain components (fine-tuning, synthetic data generation) and agentic applications. The goal is to make interoperability and adoption easier across the open-source community.

Empowering the Developer Community

By making the Llama model weights openly available for download, Meta has empowered developers to fully customize the models for their unique needs and applications. This includes the ability to train on new datasets, conduct additional fine-tuning, and run the models in any environment – all without the need to share data with Meta.

Benchmarking Llama 3.1: Competitive Across the Board

Meta’s commitment to rigorous evaluation and benchmarking of the Llama 3.1 models is a testament to their confidence in the capabilities of these AI systems.

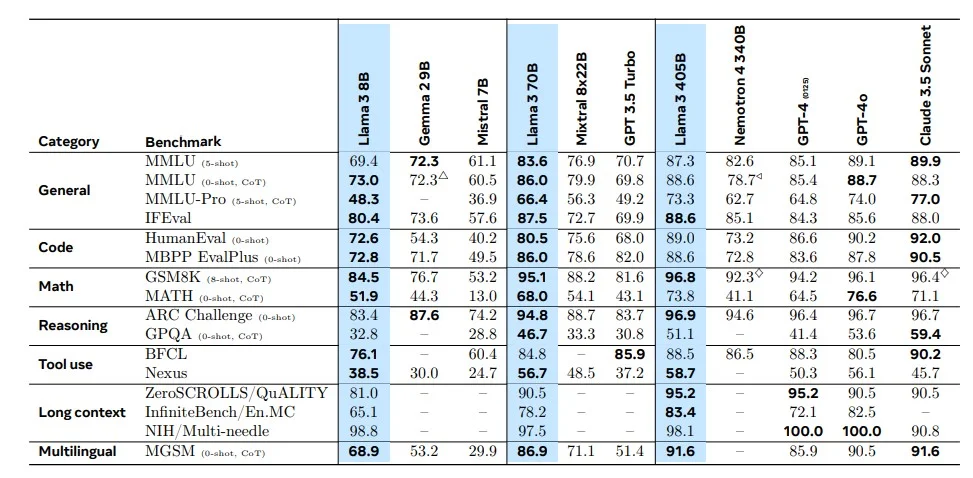

Extensive Evaluations Across Domains

For this release, Meta evaluated the performance of Llama 3.1 on over 150 benchmark datasets spanning a wide range of languages and tasks. Additionally, they conducted extensive human evaluations, comparing the models’ performance against leading foundation models like GPT-4, GPT-4o, and Claude 3.5 Sonnet in real-world scenarios.
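Pairwise human evaluations like the ones against GPT-4, GPT-4o, and Claude 3.5 Sonnet are commonly summarized as win, tie, and loss rates. The helper below computes those percentages from a list of per-prompt judgments; the labels and the function are illustrative assumptions, since the article does not publish raw judgment data.

```python
from collections import Counter


def win_tie_loss(outcomes: list[str]) -> dict[str, float]:
    """Percentage of 'win', 'tie', and 'loss' judgments in a pairwise comparison."""
    if not outcomes:
        raise ValueError("no judgments to summarize")
    counts = Counter(outcomes)
    unknown = set(counts) - {"win", "tie", "loss"}
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = len(outcomes)
    return {label: 100 * counts[label] / total for label in ("win", "tie", "loss")}
```

For example, `win_tie_loss(["win", "win", "tie", "loss"])` returns 50% wins, 25% ties, and 25% losses.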

Competitive Performance Across the Board

The results of these evaluations are nothing short of impressive. Meta’s experimental data suggests that the Llama 3.1 flagship model is highly competitive with the top AI models across a diverse range of tasks, including general knowledge, math, reasoning, and multilingual capabilities. Even the smaller 8B and 70B variants have demonstrated their ability to hold their own against closed and open-source models of similar size.

Pricing and Deployment Options: Maximizing Value and Accessibility

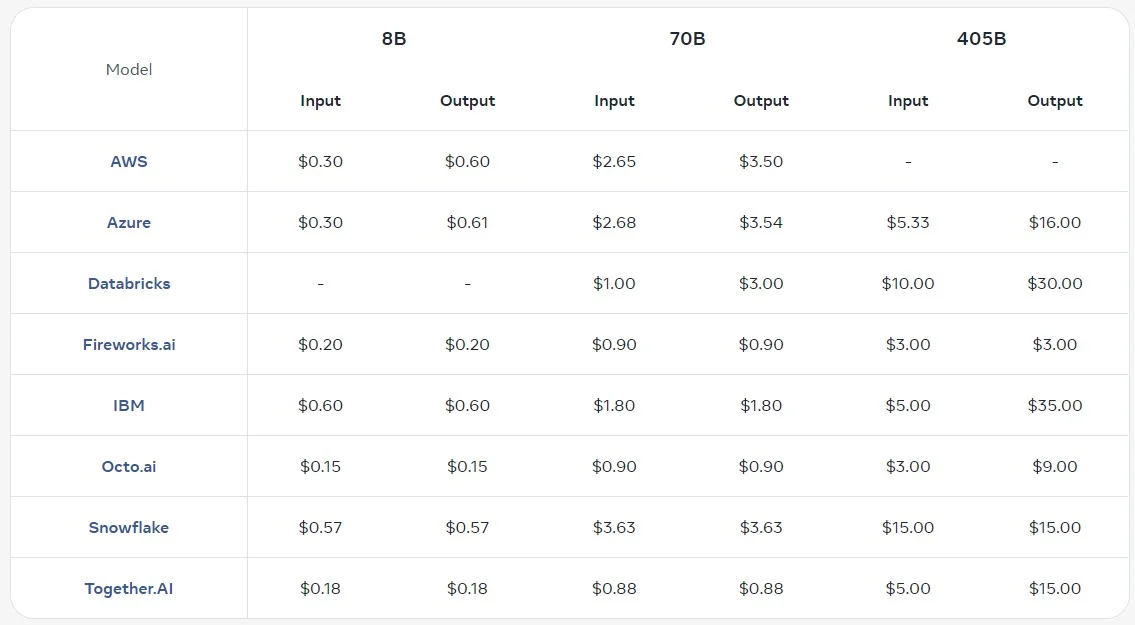

As developers and organizations explore the potential of Llama 3.1, the question of pricing and deployment options becomes crucial. Meta has worked closely with its partners to ensure that Llama 3.1 is both cost-effective and widely accessible.

Competitive Pricing Across Providers

Meta has released detailed pricing information for hosted Llama 3.1 inference API services, showcasing the competitive landscape across various cloud providers and platform partners. This transparency allows developers to make informed decisions and optimize their deployment strategies based on their specific needs and budgets.
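Hosted inference is usually billed per million input and output tokens, so comparing providers reduces to simple arithmetic. The sketch below uses placeholder prices, not figures from Meta’s pricing table; substitute the current rates from whichever provider you are evaluating. `Pricing`, `request_cost`, and `monthly_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    """Per-million-token prices in USD; any values used are placeholders."""
    input_per_million: float
    output_per_million: float


def request_cost(input_tokens: int, output_tokens: int, price: Pricing) -> float:
    """Cost in USD of a single request."""
    return (input_tokens * price.input_per_million
            + output_tokens * price.output_per_million) / 1_000_000


def monthly_cost(requests_per_day: int, avg_in: int, avg_out: int,
                 price: Pricing, days: int = 30) -> float:
    """Rough monthly estimate assuming uniform traffic and average request sizes."""
    return requests_per_day * days * request_cost(avg_in, avg_out, price)
```

For instance, with placeholder prices of $1 per million input tokens and $2 per million output tokens, a request with one million input tokens and 500,000 output tokens costs $2.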

Flexible Deployment Options

In addition to the hosted inference services, Llama 3.1 models can also be downloaded and deployed locally, for free, giving developers the freedom to run the models in their preferred environments.

As Meta stated: “True to our commitment to open source, starting today, we’re making these models available to the community for download on llama.meta.com and Hugging Face and available for immediate development on our broad ecosystem of partner platforms.”

This flexibility, combined with the open-source nature of the models, empowers the community to explore and innovate without being constrained by centralized infrastructure or data-sharing requirements.

The Impact of Llama 3.1: Transforming the AI Landscape

The release of Llama 3.1 is poised to have a profound and far-reaching impact on the AI industry and beyond. By making these powerful models openly available, Meta is paving the way for a new era of innovation and democratization of AI technology.

Driving Open-Source Advancements

The open-source nature of Llama 3.1 allows developers and researchers to fully customize and extend the models, unlocking new use cases and pushing the boundaries of what is possible with generative AI. This collaborative approach fosters a culture of innovation and rapid progress, benefiting the entire AI community.

Democratizing AI Capabilities

By removing barriers to access and empowering developers worldwide, Llama 3.1 democratizes the power of AI. This aligns with Meta’s vision of ensuring that the benefits and opportunities of AI technology are distributed more evenly across society, rather than being concentrated in the hands of a few.

Fostering Responsible AI Development

Alongside the technical advancements, Meta has also placed a strong emphasis on responsible AI development. The Llama System incorporates safety measures like Llama Guard 3 and Prompt Guard, demonstrating a commitment to building AI systems that are not only capable, but also ethical and trustworthy.

The Future of Llama: Endless Possibilities

As impressive as Llama 3.1 may be, Meta’s vision for the future of this AI model is even more ambitious. The company is already exploring new frontiers, paving the way for even greater advancements in the years to come.

Expanding Model Capabilities

While the current Llama 3.1 models already excel across a wide range of tasks, Meta is committed to further expanding their capabilities. This includes exploring more device-friendly model sizes, incorporating additional modalities, and investing heavily in the agent platform layer to enable even more sophisticated and agentic behaviors.

Driving Ecosystem Growth

The Llama ecosystem is poised for exponential growth, with Meta actively collaborating with a diverse array of partners to build out the supporting infrastructure, tooling, and services. By fostering this collaborative environment, the company aims to lower the barriers to entry and empower developers to create innovative applications that harness the full potential of Llama.

Cementing Open-Source Leadership

Through the continued development and refinement of Llama, Meta is solidifying its position as a leader in the open-source AI space. By setting new benchmarks for performance, scalability, and responsible development, the company is paving the way for a future where open-source AI models become the industry standard, driving widespread innovation and accessibility.

Conclusion: Embracing the Llama Revolution

The release of Llama 3.1 marks a pivotal moment in the evolution of artificial intelligence. By making this model openly available, Meta has empowered developers, researchers, and innovators worldwide to push the boundaries of what is possible with generative AI. From synthetic data generation to model distillation, the Llama 3.1 collection offers unparalleled capabilities that are poised to transform industries and unlock new frontiers of human-machine collaboration.

As the Llama ecosystem continues to grow and evolve, the impact of this open-source revolution will only become more profound. By fostering a collaborative, transparent, and responsible approach to AI development, Meta is paving the way for a future where the benefits of advanced AI technology are accessible to all.

Try Llama 3.1 for yourself, or read Meta’s fully detailed Research Paper!

Descriptions

LLM Hallucination: Instances where large language models generate incorrect or fabricated information.

HaluBench: A benchmark dataset used to evaluate AI models’ accuracy in detecting hallucinations.

PubMedQA Dataset: A dataset designed to evaluate AI models in the domain of medical question-answering.

FSDP Machine Learning Technique: Fully Sharded Data Parallelism, a distributed training technique that shards model parameters, gradients, and optimizer states across GPUs, reducing per-GPU memory so that large language models can be trained efficiently at scale.

Context Window: The maximum number of tokens a model can process at once, covering both the input and the generated output. Llama 3.1’s context window is 128K tokens, allowing for longer documents and more detailed conversations.

Quantization: The process of reducing the number of bits used to represent data; in this case, converting model numerics from 16-bit to 8-bit to cut memory and compute requirements while aiming to preserve output quality.

Supervised Fine-Tuning (SFT): A training method where a model is fine-tuned on a specific task using labeled data to improve its performance.

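Direct Preference Optimization (DPO): A technique that trains the model directly on pairs of preferred and rejected responses, increasing the likelihood of preferred outputs relative to a frozen reference model without training a separate reward model.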

Prompt Injection Filter: A safety feature that prevents malicious prompts from affecting the model’s output.

Llama Stack: A set of standardized interfaces for building and deploying AI applications using Llama models.

Frequently Asked Questions

1. What is Meta’s Llama 3.1? Meta’s Llama 3.1 is the latest iteration of Meta’s open-source large language model, designed to push the boundaries of AI technology with advanced capabilities in general knowledge, reasoning, and multilingual support.

2. How does the 405B parameter model of Llama 3.1 compare to other models? The 405B parameter model is the flagship of the Llama 3.1 family, with strong capabilities in general knowledge, steerability, and tool use. According to Meta’s evaluations, it is competitive with top AI models and is designed for the most demanding applications.

3. What improvements does Llama 3.1 offer over its predecessors? Llama 3.1 features a significantly longer context window (128K tokens, up from 8K in Llama 3), enhanced training stability, and improved data quality. These improvements result in better performance, especially in complex tasks requiring advanced reasoning and multilingual capabilities.

4. How does Meta promote the safe use of Llama 3.1? As part of the Llama System, Meta provides safety components such as Llama Guard 3, which classifies prompts and responses for unsafe content, and Prompt Guard, which detects prompt injection attempts. These tools help developers reduce misuse, though they do not by themselves guarantee that the model’s output is accurate.

5. How can developers access and use Llama 3.1? Llama 3.1 is available for download on llama.meta.com and Hugging Face under the Llama 3.1 Community License. Developers can customize the models for their unique needs, train on new datasets, and deploy them in various environments without sharing data with Meta.