Last Updated on July 10, 2024 10:56 am by Laszlo Szabo / NowadAIs | Published on July 10, 2024 by Laszlo Szabo / NowadAIs

LivePortrait: From Static Images to Dynamic Portrait Animations with AI – Key Notes

- LivePortrait is an AI-driven portrait animation framework.

- Developed by Kuaishou Technology, University of Science and Technology of China, and Fudan University.

- Uses an implicit-keypoint-based framework for efficiency and control.

- Capable of generating animations at 12.8 milliseconds per frame on an RTX 4090 GPU.

- Trained on 69 million high-quality frames for improved generalization.

- Features stitching and retargeting modules for enhanced control.

- Can animate animal portraits as well as human portraits.

- Addresses ethical concerns about potential misuse for deep fakes.

LivePortrait is Available – Introduction

Portrait animation has long been a captivating and challenging domain within digital content creation. Traditionally, bringing a static image to life required painstaking manual effort, complex software, and significant time. Recent advances in artificial intelligence (AI), however, have made portrait animation much easier, more controllable, and higher in quality.

Enter LivePortrait, a new AI-driven portrait animation framework developed by a collaborative team of researchers from Kuaishou Technology, the University of Science and Technology of China, and Fudan University. This innovative tool harnesses the power of AI to transform static portraits into lifelike, dynamic visuals, offering a transformative solution for content creators, digital artists, and various practical applications.

Implicit-Keypoint-Based Framework: Balancing Efficiency and Controllability

At the core of LivePortrait’s approach is its reliance on an implicit-keypoint-based framework, a departure from the mainstream diffusion-based methods. This strategic choice allows the model to strike a delicate balance between computational efficiency and granular controllability, making it a practical and versatile solution for real-world scenarios.

Unlike diffusion-based techniques, which can be computationally intensive, LivePortrait’s implicit-keypoint-based framework generates frames quickly: the model produces animations at 12.8 milliseconds per frame on an RTX 4090 GPU. This efficiency is crucial in applications where real-time performance is paramount, such as video conferencing, social media, and interactive entertainment.
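To put the 12.8 ms figure in perspective, the short Python calculation below converts per-frame latency into frames per second and compares it with the per-frame time budget at a few common frame rates. Only the 12.8 ms value comes from the article; the target frame rates are illustrative choices.

```python
# Convert a per-frame latency into throughput and compare it with the time
# budget per frame at common target frame rates.

def fps_from_latency(ms_per_frame: float) -> float:
    """Frames per second achievable at a given per-frame latency in ms."""
    return 1000.0 / ms_per_frame


def frame_budget_ms(target_fps: float) -> float:
    """Milliseconds available per frame to sustain a target frame rate."""
    return 1000.0 / target_fps


LATENCY_MS = 12.8  # per-frame generation time reported on an RTX 4090

print(f"throughput: {fps_from_latency(LATENCY_MS):.1f} fps")
for fps in (24, 30, 60):  # illustrative targets
    budget = frame_budget_ms(fps)
    print(f"{fps} fps: budget {budget:.1f} ms, headroom {budget - LATENCY_MS:.1f} ms")
```

At 12.8 ms per frame, the generation step alone runs at roughly 78 frames per second, inside the 16.7 ms budget of a 60 fps stream. In a real application, other stages such as capture, face cropping, and video encoding would use part of the remaining headroom.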

Enhancing Generalization and Generation Quality

To further bolster LivePortrait’s capabilities, the researchers implemented several key enhancements, starting with the training dataset. Scaling the training data up to about 69 million high-quality frames significantly improved the model’s generalization, enabling it to handle a wide range of portrait styles and scenarios with greater accuracy and realism:

“Specifically, we first enhance a powerful implicit-keypoint-based method [5], by scaling up the training data to about 69 million high-quality portrait images, introducing a mixed image-video training strategy, upgrading the network architecture, using the scalable motion transformation, designing the landmark-guided implicit keypoints optimization and several cascaded loss terms,” the researchers stated in their paper.

Complementing this data-driven approach, the team has also adopted a mixed image-video training strategy, allowing the model to learn from both static images and dynamic video frames. This hybrid learning process has resulted in more natural and fluid animations, seamlessly blending the static appearance of the source portrait with the expressive motion derived from the driving data.
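The article does not describe how the two data sources are combined, so the sketch below is a hypothetical illustration of one way a data loader could mix them. It assumes each training sample is a (source, driving) frame pair: for a video, two different frames from the same clip; for a still image, treated as a one-frame clip, the same image in both roles. The `Clip` type, the `image_ratio` parameter, and the pairing rule for still images are our assumptions, not LivePortrait’s code.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Clip:
    """Hypothetical training sample: an ordered list of frame IDs.
    A still image is treated as a clip with a single frame."""
    frames: List[str]


def sample_pair(clip: Clip, rng: random.Random) -> Tuple[str, str]:
    """Return a (source, driving) pair drawn from one clip."""
    if len(clip.frames) == 1:
        # Still image: the only available frame plays both roles (assumption).
        return clip.frames[0], clip.frames[0]
    # Video: two distinct frames, so appearance comes from one frame and
    # motion from another frame of the same person.
    source, driving = rng.sample(clip.frames, 2)
    return source, driving


def mixed_batch(videos: List[Clip], images: List[Clip], batch_size: int,
                image_ratio: float, rng: random.Random) -> List[Tuple[str, str]]:
    """Draw about `image_ratio` of the batch from still images, the rest from videos."""
    batch = []
    for _ in range(batch_size):
        pool = images if rng.random() < image_ratio else videos
        batch.append(sample_pair(rng.choice(pool), rng))
    return batch


rng = random.Random(0)
videos = [Clip([f"vid{v}_f{i}" for i in range(8)]) for v in range(3)]
images = [Clip([f"img{k}"]) for k in range(5)]
for source, driving in mixed_batch(videos, images, batch_size=4, image_ratio=0.3, rng=rng):
    print(source, "->", driving)
```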

Upgraded Network Architecture and Compact Implicit Keypoints

Alongside the larger training dataset and the mixed training strategy, the researchers upgraded the network architecture and introduced a scalable motion transformation, a landmark-guided optimization of the implicit keypoints, and several cascaded loss terms. These changes improve overall animation quality, so the generated results are visually compelling as well as efficient.
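The “scalable motion transformation” mentioned in the quoted passage builds on how implicit-keypoint methods move keypoints: a set of canonical keypoints is rotated by the head pose, displaced by expression offsets, and translated. The Python sketch below assumes a scaled form, x = s · (x_c R + δ) + t, with keypoints as row vectors. Treat the exact formula, the Euler-angle convention, and all function names as illustrative assumptions rather than LivePortrait’s implementation.

```python
import math
from typing import List

Vec3 = List[float]
Mat3 = List[List[float]]


def matmul(a: Mat3, b: Mat3) -> Mat3:
    """Plain 3x3 matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_matrix(pitch: float, yaw: float, roll: float) -> Mat3:
    """Rotation built from Euler angles in radians (illustrative axis order)."""
    cx, sx = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    cz, sz = math.cos(roll), math.sin(roll)
    rx = [[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]]
    ry = [[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]]
    rz = [[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]]
    return matmul(matmul(rz, ry), rx)


def transform_keypoints(canonical: List[Vec3], rot: Mat3, delta: List[Vec3],
                        t: Vec3, s: float) -> List[Vec3]:
    """Apply x = s * (x_c @ R + delta) + t to every keypoint (row vectors)."""
    out = []
    for xc, d in zip(canonical, delta):
        rotated = [sum(xc[k] * rot[k][j] for k in range(3)) for j in range(3)]
        out.append([s * (rotated[j] + d[j]) + t[j] for j in range(3)])
    return out


# Three made-up canonical keypoints, no expression change, a 15-degree head
# turn, and a 1.2x scale (e.g. the face appearing larger in the frame).
canonical = [[0.1, 0.2, 0.0], [-0.1, 0.2, 0.0], [0.0, -0.2, 0.05]]
no_expression = [[0.0, 0.0, 0.0] for _ in canonical]
R = rotation_matrix(0.0, math.radians(15), 0.0)
for kp in transform_keypoints(canonical, R, no_expression, t=[0.0, 0.0, 0.0], s=1.2):
    print([round(c, 3) for c in kp])
```

In this style of method, animating a portrait amounts to keeping the source’s canonical keypoints while swapping in the driving frame’s R, δ, t, and s; the scale term lets the transform account for faces of different apparent size.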

Moreover, the researchers found that compact implicit keypoints can effectively represent a kind of blendshapes, a long-standing building block of facial animation. Using this compact representation, LivePortrait maintains high-quality animation while minimizing computational overhead, which is crucial for real-time applications.
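Blendshapes come from classic facial animation: an expression is the neutral face plus a weighted sum of offsets toward a set of target shapes. The toy sketch below shows only that textbook linear model; it does not reproduce how LivePortrait’s implicit keypoints come to behave like blendshapes, and the mesh and target names are invented.

```python
from typing import List

Mesh = List[List[float]]  # list of (x, y, z) vertex positions


def blend(neutral: Mesh, targets: List[Mesh], weights: List[float]) -> Mesh:
    """Linear blendshape model: v = neutral + sum_i w_i * (target_i - neutral)."""
    out = [list(v) for v in neutral]
    for target, w in zip(targets, weights):
        for i, (tv, nv) in enumerate(zip(target, neutral)):
            for c in range(3):
                out[i][c] += w * (tv[c] - nv[c])
    return out


# Toy "mouth" with two vertices: upper lip and lower lip.
neutral = [[0.0, 1.0, 0.0], [0.0, -1.0, 0.0]]
jaw_open = [[0.0, 1.0, 0.0], [0.0, -3.0, 0.0]]  # lower lip drops
smile = [[0.0, 1.5, 0.0], [0.0, -0.5, 0.0]]     # both lips shift up (made up)

print(blend(neutral, [jaw_open, smile], [0.5, 0.0]))  # half-open jaw
```

Because the model is linear, a weight of 0.5 on `jaw_open` moves the lower lip exactly halfway toward the target, and weights on several targets simply add their offsets.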

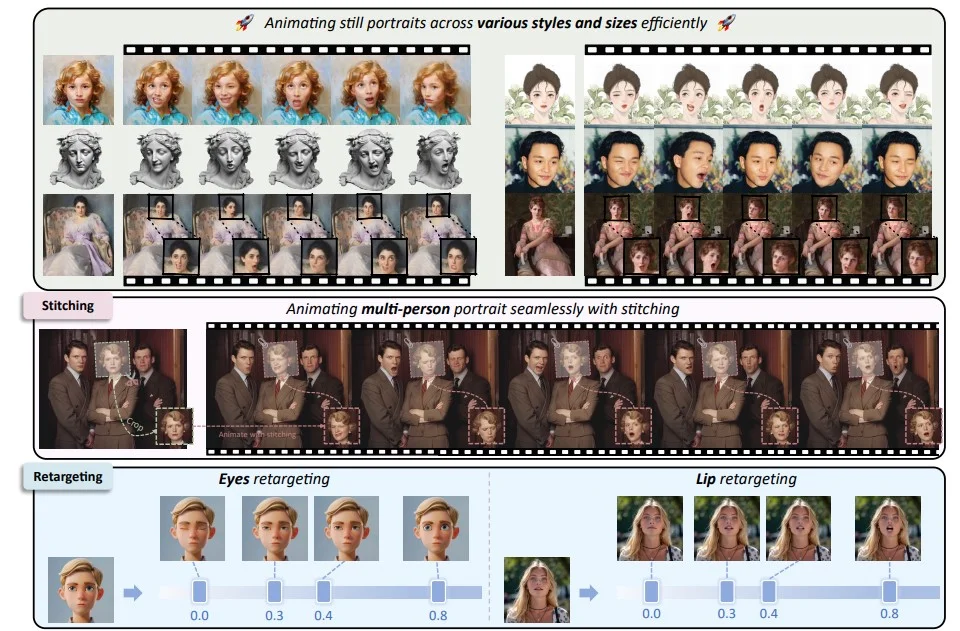

Stitching and Retargeting Modules: Enhanced Controllability

One of the standout features of LivePortrait is its meticulously designed stitching and retargeting modules, which elevate the level of control and customization available to users. These modules, powered by small Multi-Layer Perceptron (MLP) networks, introduce negligible computational overhead while enabling precise control over the animation process.
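To see why a small MLP adds so little cost, the pure-Python sketch below builds a hypothetical stitching-style head that takes source and driving keypoints and predicts per-keypoint offsets added to the driving keypoints. The input/output layout is an assumption, as are the keypoint count (21), hidden width (64), depth, and initialization; the weights are random rather than trained.

```python
import random
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights[out][in], biases[out])


def init_layer(n_in: int, n_out: int, rng: random.Random, scale: float = 0.01) -> Layer:
    """Small random weights and zero biases for one fully connected layer."""
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x: List[float], layer: Layer, relu: bool) -> List[float]:
    """y = W x + b, optionally followed by ReLU."""
    weights, biases = layer
    y = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [max(0.0, v) for v in y] if relu else y


class StitchingMLP:
    """Hypothetical stitching head: (source kp, driving kp) -> adjusted driving kp."""

    def __init__(self, num_kp: int, hidden: int, rng: random.Random):
        self.num_kp = num_kp
        d = num_kp * 3
        self.layers = [init_layer(2 * d, hidden, rng),
                       init_layer(hidden, hidden, rng),
                       init_layer(hidden, d, rng)]

    def num_params(self) -> int:
        return sum(len(w) * len(w[0]) + len(b) for w, b in self.layers)

    def __call__(self, src_kp: List[List[float]], drv_kp: List[List[float]]) -> List[List[float]]:
        x = [c for kp in src_kp + drv_kp for c in kp]  # flatten and concatenate
        for i, layer in enumerate(self.layers):
            x = dense(x, layer, relu=i < len(self.layers) - 1)  # no ReLU on output
        # Reshape the flat offsets to (num_kp, 3) and add them to the driving keypoints.
        return [[drv_kp[k][c] + x[3 * k + c] for c in range(3)] for k in range(self.num_kp)]


rng = random.Random(0)
model = StitchingMLP(num_kp=21, hidden=64, rng=rng)
src = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(21)]
drv = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(21)]
stitched = model(src, drv)
print(model.num_params(), "parameters")
```

With these assumed sizes the head has 16,383 parameters and needs roughly 16,000 multiply-adds per frame, a negligible amount of work next to synthesizing an image, which fits the article’s point about negligible overhead.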

The stitching module allows for the seamless integration of animated faces back into the original source images, enabling the animation of full-body portraits and multiple faces within a single frame. This capability is particularly valuable for applications where maintaining the integrity of the original image composition is essential.
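As we understand it, LivePortrait’s stitching module works on keypoints so that the animated crop lines up with its surroundings; the final step of putting the crop back into the full image is, in generic form, an alpha composite with a soft-edged mask. The sketch below shows only that generic compositing step on a tiny grayscale image stored as nested lists. The linear feathered mask and the function names are our assumptions, not LivePortrait’s paste-back code.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def feather_mask(h: int, w: int, border: int) -> Image:
    """1.0 in the interior, ramping linearly to 0.0 at the crop's edges."""
    mask = []
    for y in range(h):
        row = []
        for x in range(w):
            d = min(y, x, h - 1 - y, w - 1 - x)  # distance to the nearest edge
            row.append(min(1.0, d / border) if border > 0 else 1.0)
        mask.append(row)
    return mask


def paste_back(original: Image, crop: Image, top: int, left: int, mask: Image) -> Image:
    """Blend `crop` into a copy of `original` at (top, left): m*crop + (1-m)*original."""
    out = [row[:] for row in original]
    for y, (crop_row, mask_row) in enumerate(zip(crop, mask)):
        for x, (c, m) in enumerate(zip(crop_row, mask_row)):
            oy, ox = top + y, left + x
            out[oy][ox] = m * c + (1.0 - m) * original[oy][ox]
    return out


original = [[0.0] * 8 for _ in range(8)]   # dark background
crop = [[1.0] * 6 for _ in range(6)]       # bright "animated face" crop
result = paste_back(original, crop, top=1, left=1, mask=feather_mask(6, 6, border=2))
for row in result:
    print(" ".join(f"{v:.1f}" for v in row))
```

The soft mask fades the crop into the background over a couple of pixels, so small residual misalignments at the crop boundary are less visible than with a hard cut.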

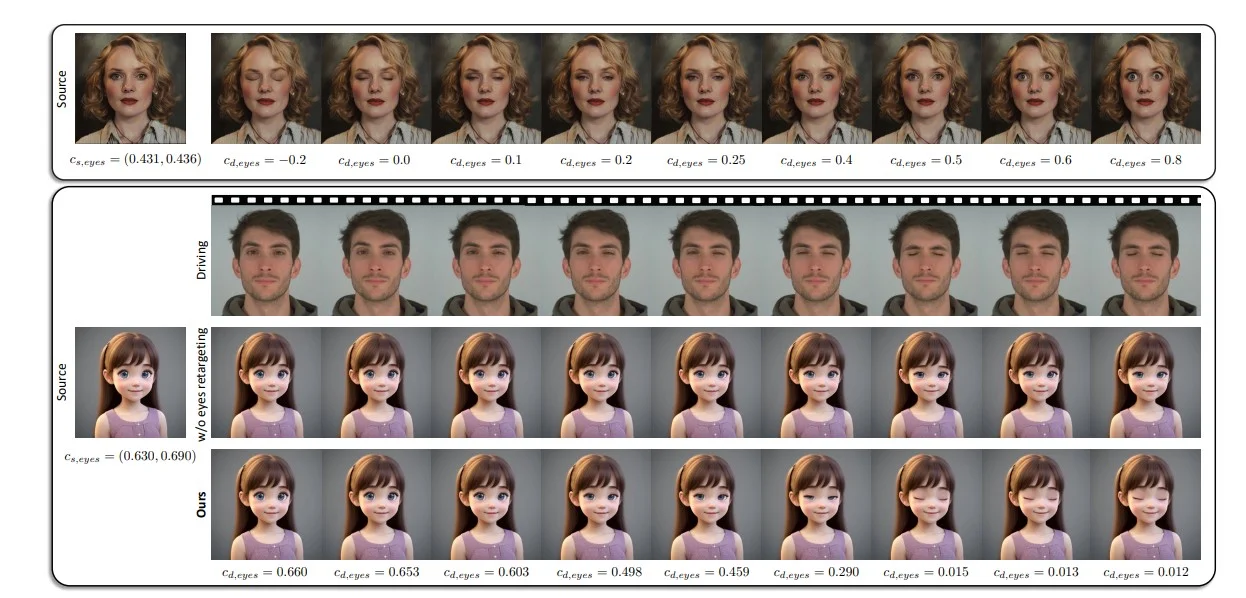

Complementing the stitching functionality, the retargeting modules provide fine-grained control over specific facial features, such as eye and lip movements. Users can now fine-tune the extent of eye-opening and lip-opening, unlocking a new level of expressiveness and creative control in the portrait animation process.
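Retargeting needs a number that says how open an eye or mouth is. One simple, hypothetical way to get it from 2D facial landmarks is the gap between the lids (or lips) divided by the eye (or mouth) width, which makes it independent of face size; a user’s slider can then scale that value to form a target. The landmark choice, the clamp value, and the function names below are assumptions. In LivePortrait, a small MLP turns such control into keypoint adjustments, which this sketch does not model.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def dist(a: Point, b: Point) -> float:
    """Euclidean distance between two 2D points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def opening_ratio(top: Point, bottom: Point, corner_a: Point, corner_b: Point) -> float:
    """Vertical opening divided by horizontal width (scale-invariant)."""
    return dist(top, bottom) / dist(corner_a, corner_b)


def target_opening(source_ratio: float, user_scale: float, max_ratio: float = 0.5) -> float:
    """Scale the measured opening by a user factor, clamped to [0, max_ratio]."""
    return max(0.0, min(max_ratio, source_ratio * user_scale))


# Made-up landmarks for one eye: lids 2 units apart, corners 10 units apart.
eye = opening_ratio(top=(0.0, 1.0), bottom=(0.0, -1.0), corner_a=(-5.0, 0.0), corner_b=(5.0, 0.0))
print(f"measured: {eye:.2f}")
print(f"wider:    {target_opening(eye, 1.5):.2f}")
print(f"closed:   {target_opening(eye, 0.0):.2f}")
```

The same ratio works for the mouth by using the lip midpoints and the mouth corners as landmarks.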

Generalization to Animal Portraits: Expanding the Creative Possibilities

The versatility of LivePortrait extends beyond human portraits, as the researchers have demonstrated its ability to generalize to animal subjects as well. By fine-tuning the model on animal data, the framework can now precisely animate the faces of cute cats, dogs, pandas, and other furry companions, opening up a world of creative possibilities for content creators and digital artists.

This cross-species animation capability broadens the potential applications of LivePortrait, empowering users to bring their animal-centric projects to life with the same level of realism and control as their human portrait animations.

Experimental Validation and Benchmarking

The effectiveness of the LivePortrait framework has been evaluated through extensive experiments and comparisons. According to the researchers, the model outperforms both diffusion-based and non-diffusion-based methods on standard metrics for portrait animation quality and motion accuracy.
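The article does not list the metrics used. As an example of a standard reconstruction-quality measure commonly reported when comparing generated frames with ground truth, the sketch below computes peak signal-to-noise ratio (PSNR) between a reference frame and a generated frame. It is the textbook definition on toy grayscale data, not the paper’s evaluation code.

```python
import math
from typing import List

Image = List[List[float]]


def psnr(reference: Image, generated: Image, max_value: float = 255.0) -> float:
    """PSNR in dB: 10 * log10(MAX^2 / MSE); higher means closer to the reference."""
    squared_error, count = 0.0, 0
    for ref_row, gen_row in zip(reference, generated):
        for r, g in zip(ref_row, gen_row):
            squared_error += (r - g) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [[100.0, 100.0], [100.0, 100.0]]
generated = [[110.0, 90.0], [100.0, 100.0]]
print(f"PSNR: {psnr(reference, generated):.2f} dB")
```

PSNR only measures pixel-level fidelity, so evaluations of animation methods typically pair it with perceptual and motion-accuracy measures.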

The stitching and retargeting modules have demonstrated their ability to seamlessly integrate animated faces into original images and provide fine-tuned control over eye and mouth movements, further solidifying LivePortrait’s position as a cutting-edge solution in the portrait animation landscape.

Practical Applications and Ethical Considerations

The potential applications of LivePortrait span a wide range of industries, from video conferencing and social media to entertainment and creative content production. By enabling efficient and controllable animation of static portraits, the framework could significantly change how digital content is created and consumed.

However, the researchers behind LivePortrait are also aware of the ethical concerns around the misuse of such technology, particularly for deep fakes. To mitigate these risks, they suggest that the visual artifacts present in the current results could aid in detecting manipulated content.

Limitations and Future Developments

While LivePortrait represents a significant advancement in portrait animation, the researchers acknowledge that there is still room for improvement. One current limitation is handling large pose variations, an area that requires further exploration and refinement.

As the field of AI-driven portrait animation continues to evolve, the LivePortrait team is committed to ongoing research and development, exploring new techniques and architectures to enhance the framework’s capabilities. The goal is to push the boundaries of what is possible in this dynamic and rapidly advancing domain, empowering creators and driving innovation.

Conclusion: Unlocking the Future of Portrait Animation

LivePortrait stands as a testament to the transformative power of AI-driven innovation in the realm of portrait animation. By striking a balance between computational efficiency and granular control, the framework has redefined the possibilities for bringing static images to life, catering to the diverse needs of content creators, digital artists, and various practical applications.

As the technology continues to evolve, the potential for even more captivating and expressive animated portraits remains limitless, paving the way for a future where the boundaries between the static and the dynamic are seamlessly blurred.

Definitions

- LivePortrait: It’s an AI-driven framework designed to animate static portraits into dynamic, lifelike visuals.

- Kuaishou Technology: A Chinese technology company known for its popular video-sharing app, Kuaishou.

- University of Science and Technology of China: A prestigious research university in China specializing in science and engineering.

- Fudan University: One of China’s most renowned universities, located in Shanghai, known for its research in various scientific fields.

- RTX 4090 GPU: A high-performance graphics processing unit from NVIDIA, used for intensive computing tasks like AI and rendering.

- Video Frame: A single image or snapshot in a sequence that makes up a video.

- Multi-Layer Perceptron (MLP) Networks: A type of artificial neural network used for machine learning tasks, consisting of multiple layers of nodes.

- Deep Fakes: AI-generated synthetic media where a person’s likeness is replaced with someone else’s in videos or images, often used maliciously.

Frequently Asked Questions

1. What is LivePortrait? LivePortrait is an AI-driven framework that animates static portraits into dynamic, lifelike visuals. Developed by a team of researchers from Kuaishou Technology, University of Science and Technology of China, and Fudan University, it leverages advanced AI to create realistic animations.

2. How does LivePortrait work? LivePortrait uses an implicit-keypoint-based framework, which balances efficiency and control. This allows the model to generate animations at 12.8 milliseconds per frame on an RTX 4090 GPU, making it suitable for real-time applications like video conferencing and social media.

3. What are the key features of LivePortrait? LivePortrait features a large training dataset of 69 million high-quality frames, stitching and retargeting modules for enhanced control, and the ability to animate both human and animal portraits. It provides precise control over facial features and seamlessly integrates animated faces into original images.

4. Can LivePortrait be used for creating deep fakes? While LivePortrait has advanced capabilities, the researchers are aware of the potential for misuse in creating deep fakes. They have suggested that visual artifacts in the current results could help in detecting manipulated content, aiming to prevent unethical use.

5. What applications does LivePortrait have? LivePortrait can be used in various fields such as video conferencing, social media, entertainment, and creative content production. It offers an efficient and controllable way to animate static portraits, revolutionizing digital content creation.