Last Updated on August 26, 2024 12:33 pm by Laszlo Szabo / NowadAIs | Published on August 26, 2024 by Laszlo Szabo / NowadAIs

Jamba 1.5: AI21’s Hybrid AI 2.5x Faster Than All Leading Competitors – Key Notes

- Jamba 1.5 combines Transformer and Mamba architectures, offering enhanced performance and efficiency.

- The model handles long-context scenarios with a 256,000-token context window, maintaining peak performance.

- Multilingual capabilities in Jamba 1.5 make it effective in multiple languages including Spanish, French, and German.

- The model introduces the ExpertsInt8 quantization technique, allowing large-scale deployment on minimal hardware.

Introduction

Introducing Jamba 1.5, a hybrid AI model that blends the strengths of the Transformer and Mamba architectures to deliver strong performance, efficiency, and versatility. Developed by AI21, this technology is changing what’s possible in natural language processing.

The Jamba 1.5 Advantage: A Hybrid Powerhouse

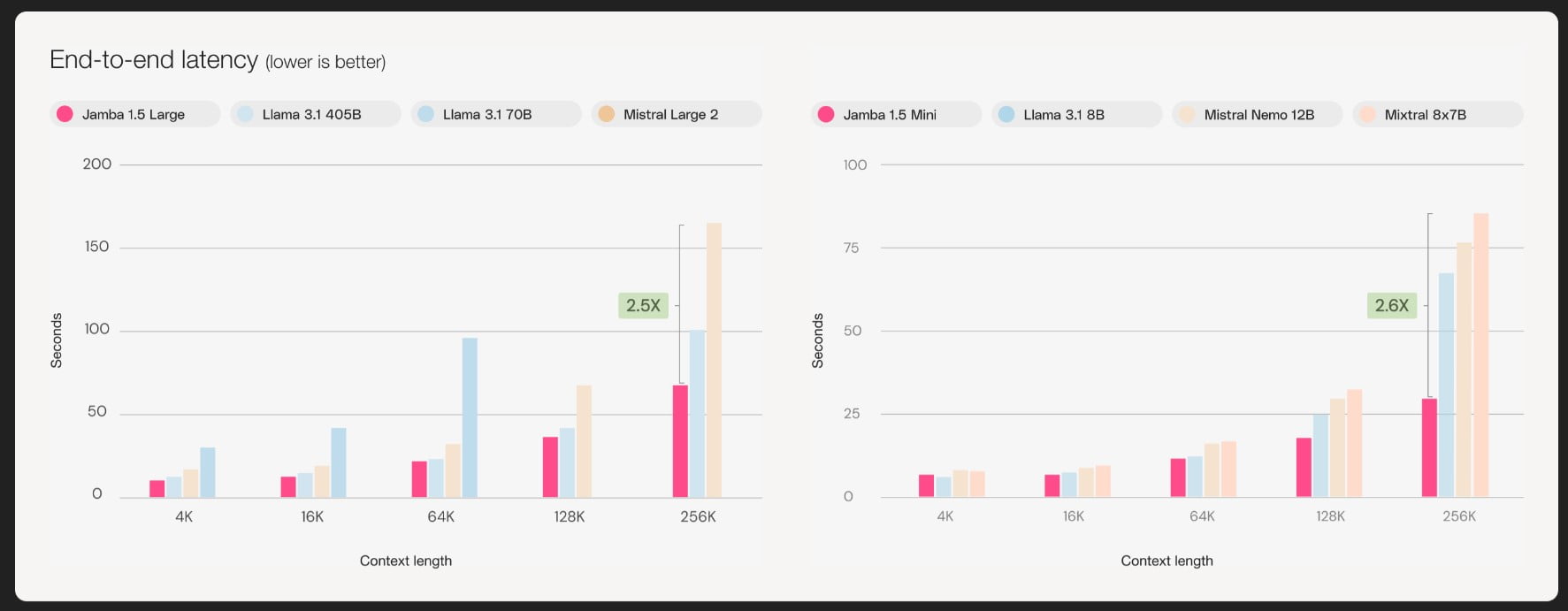

At the core of Jamba 1.5 lies a novel approach that combines the robust Transformer architecture with the newer Mamba architecture. The resulting hybrid model excels at long-context scenarios and is also fast and efficient: AI21 reports inference up to 2.5 times faster than competing models.
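
One way to picture a hybrid stack is as a schedule of layer types. The practical point is that only attention layers keep a key-value cache that grows with the context length, while Mamba layers carry a fixed-size state. The sketch below is illustrative only: the function name, the layer count, and the one-in-eight attention ratio are assumptions chosen for the example, not figures taken from this article.

```python
def hybrid_layer_schedule(num_layers: int, attention_every: int) -> list[str]:
    """Return a list of layer types with one attention layer per group.

    Both parameters are hypothetical knobs for illustration; they do not
    describe Jamba 1.5's actual configuration.
    """
    if attention_every < 1:
        raise ValueError("attention_every must be >= 1")
    schedule = []
    for i in range(num_layers):
        # Put the attention layer in the last slot of each group (arbitrary choice).
        is_attention = (i % attention_every) == attention_every - 1
        schedule.append("attention" if is_attention else "mamba")
    return schedule


schedule = hybrid_layer_schedule(num_layers=16, attention_every=8)
attention_layers = schedule.count("attention")
print(schedule)
print(f"{attention_layers} of {len(schedule)} layers keep a key-value cache")
```

The fewer attention layers in the schedule, the less cache memory grows as the input gets longer, which is the intuition behind the efficiency claims for hybrid designs.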

Unparalleled Context Handling Capabilities

One of the standout features of Jamba 1.5 is its ability to seamlessly navigate and process extensive contextual information. With an unprecedented context window of 256,000 tokens, this AI marvel empowers users to engage with and comprehend intricate narratives, complex datasets, and voluminous documents with unmatched precision and clarity.
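
To make the 256,000-token figure concrete, here is a rough sketch of a pre-flight check that estimates whether a document fits in the window. The four-characters-per-token ratio is a common rule of thumb for English text and is an assumption here, as are the function names and the output reserve; a real check would count tokens with the model's own tokenizer.

```python
import math

CONTEXT_WINDOW = 256_000  # tokens, the window size stated in this article


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; chars_per_token is a heuristic, not a tokenizer."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, reserved_output_tokens: int = 4_000) -> bool:
    """Check that the prompt plus a reserve for the reply stays inside the window."""
    return estimate_tokens(text) + reserved_output_tokens <= CONTEXT_WINDOW


short_doc = "word " * 200_000  # 1,000,000 characters
long_doc = "word " * 210_000   # 1,050,000 characters
print(fits_in_context(short_doc), fits_in_context(long_doc))
```

Reserving some tokens for the reply matters because the window covers both the input and the generated output.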

Optimized for Speed and Efficiency

Jamba 1.5 isn’t just a powerhouse in terms of performance; it’s also a trailblazer in the realm of speed and efficiency. Leveraging its cutting-edge architecture, this model delivers lightning-fast inference times, making it an ideal choice for time-sensitive applications and real-time interactions.

A Versatile Toolbox for Diverse Applications

Whether you’re seeking to enhance customer service experiences, streamline business processes, or unlock new frontiers in research and development, Jamba 1.5 offers a versatile toolbox tailored to meet your unique needs. With capabilities ranging from function calling and structured output generation to grounded generation and language understanding, this AI empowers you to tackle a wide array of challenges with unparalleled precision and adaptability.

Multilingual Prowess: Bridging Language Barriers

Jamba 1.5 isn’t just a linguistic virtuoso in English; it also boasts proficiency in a multitude of languages, including Spanish, French, Portuguese, Italian, Dutch, German, Arabic, and Hebrew. This multilingual prowess opens up new avenues for cross-cultural communication, enabling businesses and organizations to connect with diverse audiences on a global scale.

Benchmarking Excellence

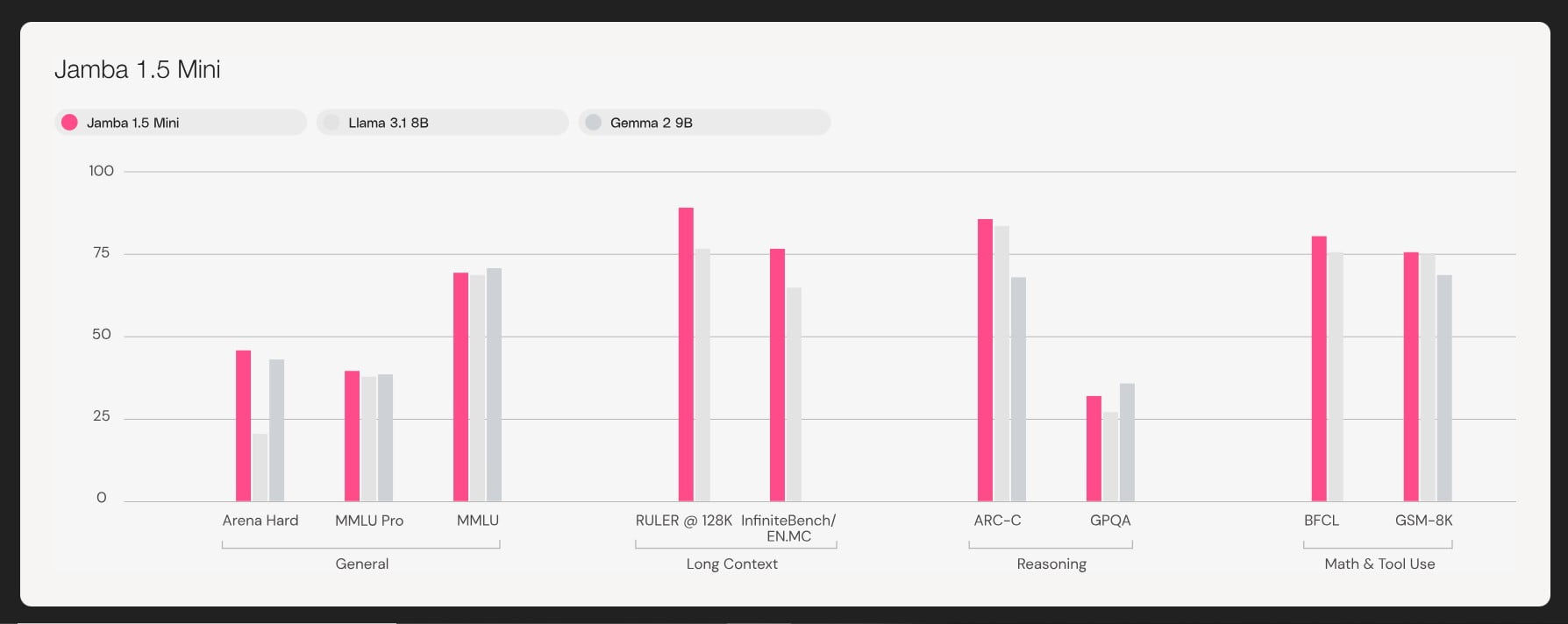

When it comes to performance benchmarks, Jamba 1.5 sets the bar high, performing strongly against its peers across a range of industry-standard tests. From challenging tasks like Arena Hard and WildBench to language understanding benchmarks like MMLU and GPQA, the model delivers competitive results.

Effective Context Length: Maintaining Peak Performance

One of the most remarkable aspects of Jamba 1.5 is its ability to maintain peak performance across the entirety of its extensive context window. Unlike many other models that experience a drop in effectiveness as context lengths increase, Jamba 1.5 consistently delivers reliable and accurate results, even when dealing with the most complex and lengthy inputs.

Quantization Innovation

To further enhance its capabilities and accessibility, AI21 has introduced a quantization technique called ExpertsInt8. This innovative approach allows Jamba 1.5 Large, the larger variant of the model family, to be deployed on a single machine with just eight 80GB GPUs, without compromising on quality or performance. This feat not only expands the model’s reach but also paves the way for more efficient and cost-effective deployments.
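
The sketch below illustrates the general idea behind 8-bit weight quantization and why it affects how many GPUs a model needs. It is a generic symmetric INT8 scheme written in plain Python, not AI21's ExpertsInt8 implementation, and the 400-billion parameter count is a round hypothetical number used only for the arithmetic. The estimate also ignores activations and cache memory.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize_int8(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


weights = [0.5, -1.27, 0.01, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize_int8(quantized, scale)
print(quantized, restored)

HYPOTHETICAL_PARAMS = 400e9  # illustrative assumption, not from the article
GPU_BUDGET_GB = 8 * 80       # eight 80GB GPUs, as described above
for label, nbytes in (("16-bit", 2), ("8-bit", 1)):
    needed = weight_memory_gb(HYPOTHETICAL_PARAMS, nbytes)
    print(f"{label}: {needed:.0f} GB of weights, fits: {needed <= GPU_BUDGET_GB}")
```

Halving the bytes per weight halves the weight memory, which is the difference between exceeding and fitting within the eight-GPU budget in this hypothetical case.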

Seamless Integration: Deployment Options Galore

Whether you’re seeking a cloud-based solution or prefer an on-premises deployment, Jamba 1.5 offers a range of options to suit your unique requirements. From AI21’s production-grade SaaS platform to strategic partnerships with industry leaders and custom VPC and on-premises deployments, this AI marvel ensures a seamless integration into your existing infrastructure.

Empowering Enterprises: Tailored Solutions

For enterprises with unique and bespoke requirements, AI21 goes the extra mile, offering hands-on management, continuous pre-training, and fine-tuning capabilities. This personalized approach ensures that Jamba 1.5 can be tailored to meet the specific needs of your organization, unlocking new realms of productivity and innovation.

Unleashing Creativity: Tools for Developers

Jamba 1.5 isn’t just a powerhouse for enterprises; it’s also a treasure trove for developers and creators. With built-in features like function calling, JSON mode output, document objects, and citation mode, this AI model empowers developers to unleash their creativity and build applications.
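
As a rough sketch of how function calling and JSON output fit together on the application side: the model returns a structured request naming a function and its arguments, and the application runs it. The JSON shape, the `get_order_status` function, and the `dispatch_tool_call` helper below are all hypothetical and do not reflect AI21's actual API response format.

```python
import json


def get_order_status(order_id: str) -> dict:
    """Hypothetical application function the model may ask us to call."""
    return {"order_id": order_id, "status": "shipped"}


# Registry of functions the application is willing to expose to the model.
TOOLS = {"get_order_status": get_order_status}


def dispatch_tool_call(raw_model_output: str) -> dict:
    """Parse a JSON tool call and run the matching registered function."""
    call = json.loads(raw_model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Model requested unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Assumed example of what a model's structured output could look like.
model_output = '{"name": "get_order_status", "arguments": {"order_id": "A-1001"}}'
result = dispatch_tool_call(model_output)
print(json.dumps(result))
```

Keeping an explicit registry means the model can only trigger functions the developer has chosen to expose, and the result can be passed back to the model for the final answer.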

Ethical and Responsible AI: A Commitment to Excellence

At the heart of Jamba 1.5’s development lies a steadfast commitment to ethical and responsible AI practices. AI21 has taken great strides to ensure that this model adheres to the highest standards of transparency, privacy, and security, fostering trust and confidence among users and stakeholders alike.

The Future of AI: Embracing Jamba 1.5

As the world continues to embrace the transformative potential of artificial intelligence, Jamba 1.5 stands as a beacon of innovation and progress. With its long-context capabilities, efficiency, and commitment to excellence, this AI model is poised to shape the future of natural language processing and beyond. Embrace the power of Jamba 1.5 and unlock a world of possibilities!

Descriptions

- Hybrid AI Model: A combination of different AI architectures to leverage the strengths of each. Jamba 1.5 integrates Transformer and Mamba frameworks to enhance processing power and efficiency.

- Context Window: The amount of text the AI can process at one time. Jamba 1.5 has a context window of 256,000 tokens, meaning it can handle very long texts without losing accuracy.

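- Quantization (ExpertsInt8): A technique that stores model weights at lower numeric precision, such as 8-bit integers, to cut memory use. ExpertsInt8 is the quantization technique AI21 introduced for Jamba 1.5; it allows Jamba 1.5 Large to run on a single machine with eight 80GB GPUs, which AI21 says comes without a loss in quality.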

- Function Calling: A feature that lets the model request a specific function or tool, with arguments, based on user input; the calling application runs the function and returns the result. Jamba 1.5 includes this to enable more interactive and dynamic outputs.

- Grounded Generation: The ability of AI to generate text based on specific, real-world data or sources, ensuring more accurate and reliable information.

- On-Premises Deployment: A setup where AI models are run on the physical servers within an organization, offering greater control over data and operations. Jamba 1.5 supports this alongside cloud-based solutions.

Frequently Asked Questions

- What is Jamba 1.5, and how does it differ from previous models?

Jamba 1.5 is a hybrid AI model combining Transformer and Mamba architectures. It excels in processing extensive contexts and offers superior speed and efficiency compared to earlier models.

- How does Jamba 1.5 handle long-context scenarios?

With a 256,000-token context window, Jamba 1.5 can process large amounts of text while maintaining high accuracy, making it ideal for complex narratives and lengthy documents.

- What languages does Jamba 1.5 support?

Jamba 1.5 is multilingual, proficient in languages like Spanish, French, German, and more, enabling it to perform well in diverse linguistic environments.

- What is the ExpertsInt8 quantization in Jamba 1.5?

ExpertsInt8 is a quantization technique that reduces the model’s resource requirements. It allows Jamba 1.5 Large to run on just eight 80GB GPUs, making it more cost-effective.

- Can Jamba 1.5 be deployed on-premises?

Yes, Jamba 1.5 supports both cloud-based and on-premises deployments, providing flexibility depending on the organization’s needs and infrastructure.