Last Updated on May 24, 2024 12:00 pm by Laszlo Szabo / NowadAIs | Published on May 24, 2024 by Laszlo Szabo / NowadAIs

Cohere’s Aya-23 Models: LLM in 23 Languages

Key Notes

- Aya-23 Models: Latest multilingual large language models from Cohere For AI.

- 8B and 35B Parameters: Two versions catering to different computational capabilities.

- Multilingual Ecosystem: Fine-tuned with data from the Aya Collection, which spans 114 languages.

- Performance: Significant improvements over previous models like Aya-101.

- Open Weights Release: Models available for research and development.

- Global Collaboration: More than 3,000 researchers from 119 countries contributed to the broader Aya initiative.

- Linguistic Prowess: Strong performance on tasks such as summarization, translation, and natural language understanding.

Introduction

In the rapidly evolving landscape of natural language processing (NLP), handling many languages well has become a crucial frontier. Many language models are trained predominantly on English text and perform noticeably worse in other languages, which limits their usefulness for much of the world's population. Cohere For AI's answer to this problem is the Aya-23 family of multilingual language models.

Aya-23: Bridging the Multilingual Gap

Today, we launch Aya 23, a state-of-art multilingual 8B and 35B open weights release.

Aya 23 pairs a highly performant pre-trained model with the recent Aya dataset, making multilingual generative AI breakthroughs accessible to the research community. 🌍https://t.co/9HsmypAbBb pic.twitter.com/TqHlNfh6zf

— Cohere For AI (@CohereForAI) May 23, 2024

Cohere For AI, the non-profit research arm of the Canadian enterprise AI startup Cohere, has unveiled the Aya-23 models, a series of multilingual large language models (LLMs) aimed at advancing multilingual NLP. Aya-23 builds on the earlier Aya-101 model, which covered 101 languages, but trades breadth for depth: rather than spreading model capacity across 101 languages, it concentrates on 23.

Aya-23-8B: Efficiency and Accessibility

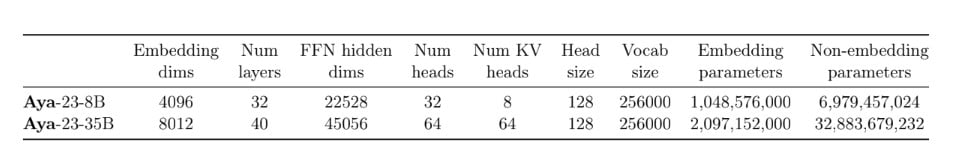

The Aya-23-8B model, with 8 billion parameters, is designed to balance performance and accessibility. Its architecture uses grouped query attention (GQA), in which several query heads share a single key/value head to cut the memory needed at inference time, and rotary positional embeddings (RoPE), which encode each token's position by rotating its query and key vectors. The result is strong multilingual performance without the need for extensive computational resources, making it a practical choice for researchers and developers working with more modest hardware.
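To make the two techniques named above concrete, here is a minimal pure-Python sketch of causal grouped query attention with rotary positional embeddings applied to queries and keys. It is illustrative only: it is not Cohere's implementation, the helper names are hypothetical, RoPE here rotates adjacent dimension pairs (many libraries use an equivalent "half-split" layout instead), and the sizes in the usage note are made up rather than Aya-23-8B's real configuration.

```python
import math
from typing import List

Vector = List[float]
Heads = List[List[Vector]]  # indexed as [head][position][dim]


def apply_rope(x: Vector, position: int, base: float = 10000.0) -> Vector:
    """Rotate each adjacent pair (x[i], x[i+1]) by angle position * base**(-i/d)."""
    d = len(x)
    if d % 2:
        raise ValueError("RoPE needs an even head dimension")
    out = [0.0] * d
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out[i] = x[i] * c - x[i + 1] * s
        out[i + 1] = x[i] * s + x[i + 1] * c
    return out


def kv_head_for(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """In GQA, consecutive groups of query heads share one key/value head."""
    if n_q_heads % n_kv_heads:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    return q_head // (n_q_heads // n_kv_heads)


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(sc - m) for sc in scores]
    total = sum(exps)
    return [e / total for e in exps]


def grouped_query_attention(q: Heads, k: Heads, v: Heads) -> Heads:
    """Causal attention where len(q) query heads share len(k) key/value heads.
    RoPE is applied to queries and keys before the dot products."""
    n_q, n_kv = len(q), len(k)
    seq_len, d = len(q[0]), len(q[0][0])
    out: Heads = []
    for h in range(n_q):
        g = kv_head_for(h, n_q, n_kv)  # shared key/value head for this query head
        rows = []
        for t in range(seq_len):
            q_t = apply_rope(q[h][t], t)
            # Causal mask: position t attends only to positions 0..t.
            scores = [
                sum(a * b for a, b in zip(q_t, apply_rope(k[g][s], s))) / math.sqrt(d)
                for s in range(t + 1)
            ]
            w = softmax(scores)
            rows.append([sum(w[s] * v[g][s][j] for s in range(t + 1)) for j in range(d)])
        out.append(rows)
    return out


def kv_cache_floats(n_layers: int, n_kv_heads: int, head_dim: int, seq_len: int) -> int:
    """Cached values: one key and one value vector per KV head, layer and token."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len
```

With hypothetical sizes of 32 layers, head dimension 128 and a 4,096-token context, `kv_cache_floats(32, 8, 128, 4096)` (8 shared key/value heads) is a quarter of `kv_cache_floats(32, 32, 128, 4096)` (one key/value head per query head, as in standard multi-head attention). That shrinking cache is the inference-time saving GQA is designed for, while RoPE makes the query-key score depend only on the relative distance between tokens.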

Aya-23-35B: Unparalleled Linguistic Prowess

For those with the computational resources to run it, the Aya-23-35B model, with 35 billion parameters, is the most capable member of the family. Built on the foundation of Cohere’s Command R model, it is tuned for complex multilingual tasks, including natural language understanding, summarization, and translation.

Aya’s Multilingual Ecosystem

The Aya-23 models are not mere standalone achievements; they are the culmination of Cohere For AI’s broader Aya initiative – a collaborative effort involving over 3,000 independent researchers from 119 countries. This global initiative has yielded a rich ecosystem of resources, including the Aya Collection, a massive multilingual dataset of 513 million prompts and completions spanning 114 languages.

The Aya Collection: Fueling Multilingual Advancement

The Aya Collection is a central part of the data behind the Aya-23 models. As Cohere’s announcement puts it, Aya-23 pairs a highly performant pre-trained model with the Aya dataset: the pre-trained model supplies general language ability, and fine-tuning on this diverse multilingual data adapts it to follow instructions across many languages. The resulting models outperform widely used open-weight models such as Gemma, Mistral, and Mixtral on multilingual tasks.

Aya-101: Laying the Groundwork

While the Aya-23 models represent the latest iteration of Cohere For AI’s multilingual efforts, the groundbreaking Aya-101 model should not be overlooked. Aya-101, released in February 2024, was a pioneering achievement in its own right, covering an unprecedented 101 languages and setting new benchmarks in massively multilingual language modeling.

Aya-23’s Performance Advantages

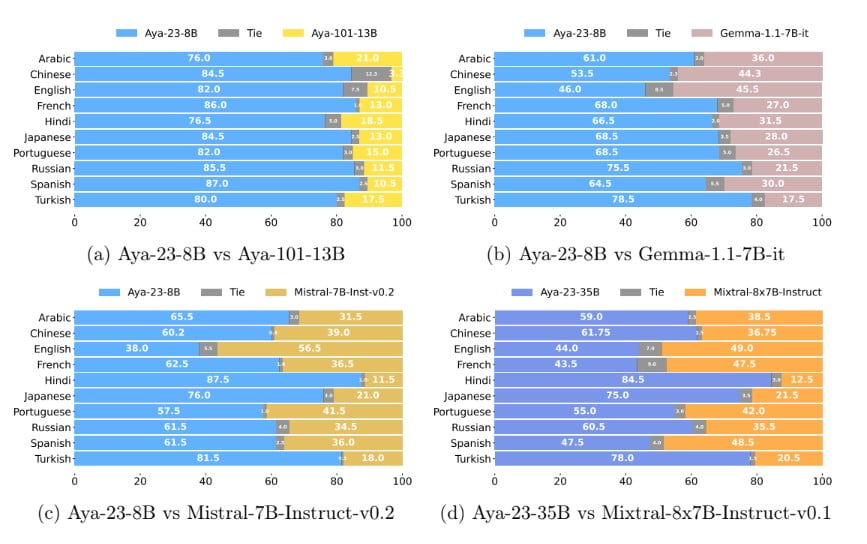

The Aya-23 models have been evaluated extensively on multilingual tasks. Compared to their predecessor, Aya-101, they show significant improvements across a range of discriminative and generative tasks, and Cohere reports that the 8-billion-parameter version achieves best-in-class multilingual performance.

Outperforming the Competition

When benchmarked against widely used open-weight models such as Gemma, Mistral, and Mixtral, the Aya-23 models outperform them across an extensive range of discriminative and generative tasks. Measured against Aya-101, the researchers report improvements of up to 14% on discriminative tasks, up to 20% on generative tasks, and a remarkable 41.6% increase in multilingual mathematical reasoning.

Consistent Quality Across Languages

One of the standout features of the Aya-23 models is that they maintain consistent quality and coherence in the text they generate across all 23 of their supported languages. This is a crucial advantage for applications that require seamless multilingual support, such as translation services, content creation, and conversational agents.

Accessibility and Open-Weights Commitment

Cohere For AI’s commitment to advancing multilingual AI research extends beyond the technical achievements of the Aya-23 models. The lab has made a concerted effort to ensure that these powerful tools are accessible to a wide range of researchers and developers.

Open Weights Release

Cohere For AI has released the open weights for both the Aya-23-8B and Aya-23-35B models, allowing the research community to explore, fine-tune, and build upon them. The weights are published under a non-commercial CC-BY-NC license, so this is an open-weights release rather than open source in the strict sense, but it still aligns with the lab’s mission to broaden access to cutting-edge AI technology.
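For readers who want to try the released weights, here is a minimal sketch using the Hugging Face `transformers` library. Assumptions: the checkpoints live under the repository IDs `CohereForAI/aya-23-8B` and `CohereForAI/aya-23-35B` as published at release (downloading may require accepting the license on the Hub); `torch` and a `transformers` version that supports Cohere models and chat templates are installed; and `build_chat` and `generate` are hypothetical wrapper names, not part of any library. The sampling settings are illustrative.

```python
from collections.abc import Mapping
from typing import Dict, List

# Hub repository IDs of the two open-weight checkpoints (as published at release).
AYA_23_MODEL_IDS = {
    "8b": "CohereForAI/aya-23-8B",
    "35b": "CohereForAI/aya-23-35B",
}


def build_chat(prompt: str) -> List[Dict[str, str]]:
    """Wrap a single user prompt in the chat-message format used by chat templates."""
    return [{"role": "user", "content": prompt}]


def generate(prompt: str, size: str = "8b", max_new_tokens: int = 100) -> str:
    """Download the chosen checkpoint (several GB) and sample a reply."""
    # Imported lazily so the helpers above work without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_id = AYA_23_MODEL_IDS[size]
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    encoded = tokenizer.apply_chat_template(
        build_chat(prompt),
        tokenize=True,
        add_generation_prompt=True,  # append the assistant-turn marker
        return_tensors="pt",
    )
    # Depending on the transformers version this is a tensor or a dict-like BatchEncoding.
    input_ids = encoded["input_ids"] if isinstance(encoded, Mapping) else encoded

    output_ids = model.generate(
        input_ids, max_new_tokens=max_new_tokens, do_sample=True, temperature=0.3
    )
    # Decode only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0][input_ids.shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate("Écris un court poème sur la mer.")` would download the 8B checkpoint on first use and return the decoded reply; passing `size="35b"` selects the larger model if your hardware allows. Keeping the `transformers` import inside `generate` means the lightweight helpers can be reused without installing it.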

Empowering the Research Community

By providing the open weights, Cohere For AI aims to inspire and empower researchers and practitioners to push the boundaries of multilingual AI. This gesture not only facilitates the advancement of the field but also fosters a collaborative spirit, where the global research community can collectively work towards addressing the challenges of language barriers in technology.

The Aya Initiative: A Paradigm Shift in Multilingual AI

The Aya-23 models are not merely the latest products of Cohere For AI’s research efforts; they are part of a broader initiative that is reshaping the landscape of multilingual AI. The Aya project, which has garnered the participation of over 3,000 independent researchers from 119 countries, represents a paradigm shift in how the machine learning community approaches the challenges of multilingual language modeling.

Democratizing Multilingual AI

By drawing on the perspectives and expertise of researchers from around the world, the Aya initiative has created a rich body of knowledge and resources that is now accessible to the broader community. This collaborative approach has enabled models like Aya-23, whose 23 languages are spoken by roughly half of the world’s population.

Empowering Underserved Languages

As Cohere’s paper puts it: “In our evaluation, we focus on 23 languages that are covered by the new Aya model family. These 23 languages are: Arabic, Chinese (simplified & traditional), Czech, Dutch, English, French, German, Greek, Hebrew, Hindi, Indonesian, Italian, Japanese, Korean, Persian, Polish, Portuguese, Romanian, Russian, Spanish, Turkish, Ukrainian and Vietnamese.”
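If you need to decide whether a request falls within Aya-23’s coverage (for example, before choosing between Aya-23 and the broader Aya-101), the language list quoted above can be captured as a small lookup table. This is a hypothetical helper, not part of any Cohere API; the ISO 639-1 codes are our own mapping of the quoted language names, and treating `zh` as covering both simplified and traditional Chinese follows the quote.

```python
# ISO 639-1 codes for the 23 languages listed in the Aya 23 paper (our own mapping).
# "zh" covers both simplified and traditional Chinese, as in the quoted list.
AYA_23_LANGUAGES = {
    "ar": "Arabic", "zh": "Chinese", "cs": "Czech", "nl": "Dutch",
    "en": "English", "fr": "French", "de": "German", "el": "Greek",
    "he": "Hebrew", "hi": "Hindi", "id": "Indonesian", "it": "Italian",
    "ja": "Japanese", "ko": "Korean", "fa": "Persian", "pl": "Polish",
    "pt": "Portuguese", "ro": "Romanian", "ru": "Russian", "es": "Spanish",
    "tr": "Turkish", "uk": "Ukrainian", "vi": "Vietnamese",
}


def is_supported(lang_tag: str) -> bool:
    """Return True if a tag such as 'pt-BR' or 'zh_Hant' maps to an Aya-23 language.
    Only the primary subtag (before the first '-' or '_') is checked."""
    primary = lang_tag.replace("_", "-").split("-")[0].lower()
    return primary in AYA_23_LANGUAGES
```

Checking only the primary subtag keeps regional and script variants (`pt-BR`, `zh-Hant`) inside the covered set, which matches how the paper groups them; anything finer-grained would need evaluation data the paper does not report here.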

One of the key drivers behind the Aya initiative is the recognition that the field of AI has historically been dominated by a handful of languages, leaving many others underrepresented and underserved. Aya-23 contributes by allocating more model capacity to 23 languages beyond English. Most of these are widely spoken rather than rare or low-resource, so broad coverage of lower-resource languages remains the role of the 101-language Aya-101; together, the two releases are steps toward ensuring that more languages are treated as first-class citizens in the rapidly evolving world of generative AI.

The Future of Multilingual AI: Aya-23 and Beyond

The introduction of the Aya-23 models marks a pivotal moment in the ongoing journey of multilingual AI research and development. As the field continues to evolve, the Aya-23 models and the broader Aya initiative stand as beacons of progress, inspiring researchers and practitioners to push the boundaries of what is possible in the realm of natural language processing.

Driving Continuous Improvement

Cohere For AI’s commitment to the Aya project suggests that its multilingual work will not end with the Aya-23 models. Continued research investment by the lab, combined with the collaborative efforts of the global Aya community, is likely to bring further refinements and breakthroughs in the years to come.

Conclusion

The Aya-23 models from Cohere For AI represent a significant step forward in multilingual NLP. Building on the Aya ecosystem, they set new benchmarks in multilingual performance and accessibility for the 23 languages they cover. As the research community builds on the open weights of the Aya-23 models, the future of multilingual AI promises to be one of innovation, collaboration, and the empowerment of diverse languages and cultures worldwide.

Definitions

- Cohere’s Aya-23 Models: A series of state-of-the-art multilingual large language models developed by Cohere For AI, designed to handle diverse languages and complex NLP tasks.

- Natural Language Processing (NLP): A field of AI that focuses on the interaction between computers and humans through natural language.

- Large Language Models (LLMs): Models trained with machine learning techniques on large amounts of text to understand, generate, and translate human language.

- AI Ecosystem: The interconnected environment of AI tools, resources, and research that supports the development and application of artificial intelligence.

- Aya Collection: A massive multilingual dataset consisting of 513 million prompts and completions across 114 languages, used in fine-tuning the Aya-23 models.

- Gemma, Mistral, and Mixtral: Widely used open-weight language models (Gemma from Google; Mistral and Mixtral from Mistral AI) that Aya-23 outperforms on a range of multilingual tasks.

Frequently Asked Questions

- What are Cohere’s Aya-23 Models? Cohere’s Aya-23 Models are advanced multilingual large language models designed to handle a wide range of languages and NLP tasks. They come in two versions: Aya-23-8B and Aya-23-35B, with 8 billion and 35 billion parameters respectively.

- How do Aya-23 Models improve over previous versions like Aya-101? The Aya-23 Models show significant improvements over Aya-101, with enhanced performance in tasks such as natural language understanding, summarization, and translation. They also offer better multilingual mathematical reasoning and overall linguistic capabilities.

- What is the Aya Collection and how does it support the Aya-23 Models? The Aya Collection is a comprehensive multilingual dataset that includes 513 million prompts and completions across 114 languages. It is a key part of the fine-tuning data for the Aya-23 Models, which pair a pre-trained base model with this multilingual instruction data, enabling them to excel in various multilingual tasks.

- Why is the open weights release of Aya-23 Models important? The open weights release allows researchers and developers to access, fine-tune, and build upon the Aya-23 Models. This democratizes access to cutting-edge AI technology and fosters a collaborative environment for advancing multilingual NLP.

- What sets Aya-23 Models apart from other models like Gemma and Mistral? Aya-23 Models outperform Gemma, Mistral, and Mixtral across a range of discriminative and generative multilingual tasks. Compared with Cohere’s own Aya-101, they improve by up to 20% on generative tasks and 41.6% in multilingual mathematical reasoning. They maintain consistent quality across their 23 languages and come in two sizes suited to both high-performance and more accessible use cases.