Last Updated on September 21, 2024 12:43 pm by Laszlo Szabo / NowadAIs | Published on September 21, 2024 by Laszlo Szabo / NowadAIs

Key Notes for Alibaba’s Qwen2.5: The AI Swiss Army Knife That’s Beating OpenAI’s ChatGPT-4

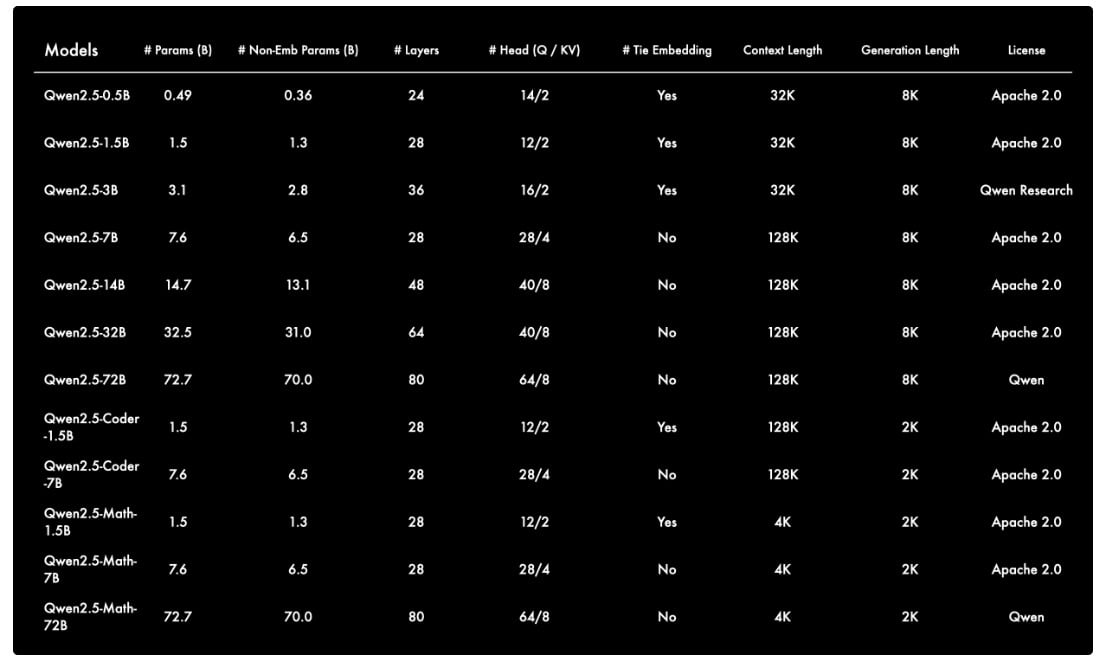

- Qwen2.5 includes models for general tasks, coding, and mathematics, with sizes ranging from 0.5B to 72B parameters

- The flagship Qwen2.5-72B is reported to outperform larger models such as Llama-3.1-405B on benchmarks for language understanding, reasoning, coding, and math

- Supports over 29 languages and offers open-source versions for wider accessibility

Qwen2.5: The Latest AI Powerhouse

Alibaba Cloud has unveiled its latest large language model (LLM) series: Qwen2.5.

Qwen2.5 is not just a single model, but a comprehensive suite of AI tools designed to cater to a diverse range of needs. The series includes base models, specialized variants for coding and mathematics, and a range of sizes to accommodate various computational requirements.

Sizes range from a compact 0.5 billion parameters to 72 billion. Smaller models need less memory and compute, while larger ones generally score higher on benchmarks, so users can pick the size that balances performance against computational cost for their use case.
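To make the size trade-off concrete, here is a rough sketch that estimates how much memory the weights alone would need at a few common numeric precisions. It assumes the simple rule "parameters times bytes per parameter" and ignores activations, the KV cache, and framework overhead, so real requirements will be higher. The function names are illustrative, not part of any Qwen tooling.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Sizes (in billions of parameters) are the ones named in this article.
MODEL_SIZES_B = [0.5, 1.5, 3, 7, 14, 32, 72]

# Bytes per parameter for common numeric formats (standard widths).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str = "fp16") -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    # (params_billion * 1e9 params) * bytes / 1e9 bytes-per-GB simplifies to this.
    return params_billion * BYTES_PER_PARAM[precision]


def largest_model_that_fits(budget_gb: float, precision: str = "fp16"):
    """Return the largest listed size whose weights fit in budget_gb, or None."""
    fitting = [s for s in MODEL_SIZES_B if weight_memory_gb(s, precision) <= budget_gb]
    return max(fitting) if fitting else None


if __name__ == "__main__":
    for size in MODEL_SIZES_B:
        fp16 = weight_memory_gb(size, "fp16")
        int4 = weight_memory_gb(size, "int4")
        print(f"{size:>5}B  fp16 ~ {fp16:6.1f} GB   int4 ~ {int4:5.1f} GB")
```

Under these assumptions, a 24 GB budget would hold the 7B model at 16-bit precision, or the 32B model if quantized to 4 bits.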

Unparalleled Language Understanding

At the core of the Qwen2.5 series is the general-purpose language model, which has showcased remarkable advancements in natural language understanding. Benchmarked against leading alternatives, the Qwen2.5-72B model has outperformed its peers across a wide range of tasks, including general question answering, reasoning, and language comprehension.

The model’s ability to process context-rich inputs of up to 128,000 tokens and generate detailed responses of up to 8,192 tokens further enhances its versatility. This makes Qwen2.5 an ideal choice for applications that require extensive textual analysis and generation, such as content creation, legal document processing, and technical writing.
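As an illustration of how those two limits interact, the sketch below clamps a requested output length to the figures quoted above. It assumes that the prompt and the generated text share the same 128,000-token window, which is a common convention but is not stated in the announcement. The function name and error messages are hypothetical.

```python
CONTEXT_LIMIT = 128_000  # maximum context length in tokens, per the article
MAX_OUTPUT = 8_192       # maximum generated tokens, per the article


def plan_generation(prompt_tokens: int, requested_output: int) -> int:
    """Return how many output tokens to request, clamped to both limits.

    Assumption: prompt and output share one 128,000-token window.
    """
    if prompt_tokens < 0 or requested_output < 0:
        raise ValueError("token counts must be non-negative")
    if prompt_tokens >= CONTEXT_LIMIT:
        raise ValueError("prompt does not fit in the context window")
    remaining = CONTEXT_LIMIT - prompt_tokens
    return min(requested_output, MAX_OUTPUT, remaining)
```

For example, a 1,000-token prompt asking for 20,000 tokens would be capped at 8,192, while a 125,000-token prompt leaves room for only 3,000.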

Breakthrough in Coding and Mathematics

Recognizing the growing importance of AI-powered programming and mathematical reasoning, Alibaba Cloud has introduced specialized variants within the Qwen2.5 series – the Qwen2.5-Coder and Qwen2.5-Math models.

The Qwen2.5-Coder model has demonstrated exceptional performance in coding tasks, outperforming larger models in several benchmarks. Its ability to handle a wide range of programming languages, from Python to C++, makes it a valuable asset for software development teams, automated code generation, and programming education.

Similarly, the Qwen2.5-Math model has shown strong mathematical reasoning, surpassing even larger models on competition-style benchmarks such as AIME 2024 and AMC 2023. It combines several reasoning methods: Chain-of-Thought (CoT), Program-of-Thought (PoT), and Tool-Integrated Reasoning (TIR), in which the model can call external tools such as a code interpreter while solving a problem.

Multimodal Advancements

Alibaba Cloud’s commitment to pushing the boundaries of AI extends beyond language models. The company has also made significant strides in multimodal capabilities, with the introduction of innovative text-to-video and enhanced vision-language models.

The text-to-video model, part of the Wanxiang large model family, can transform static images into dynamic content driven by text prompts in both Chinese and English. This technology holds immense potential for content creation, video production, and even virtual reality applications.

Furthermore, the Qwen2-VL model has been upgraded to comprehend videos up to 20 minutes in length and support video-based question-answering. This advancement paves the way for seamless integration of AI-powered visual understanding into mobile devices, automobiles, and robotics, enabling a wide range of automated operations.

Open-sourcing and Accessibility

To widen access to advanced AI technology, Alibaba Cloud has released open weights for the Qwen2.5 series. The 0.5 billion, 1.5 billion, 7 billion, 14 billion, and 32 billion parameter variants are published under the Apache 2.0 license, while the 3 billion and 72 billion variants are published under separate Qwen licenses.

By making these models freely available, Alibaba Cloud is empowering researchers, developers, and organizations of all sizes to leverage the power of Qwen2.5 in their own projects and applications.
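For developers who want to try one of the open models, the following is a minimal sketch using the Hugging Face `transformers` library. It assumes the 7B instruction-tuned checkpoint is hosted as `Qwen/Qwen2.5-7B-Instruct`, that `transformers`, `torch`, and `accelerate` are installed, and that enough GPU or CPU memory is available. The helper names `build_messages` and `generate_reply` are illustrative only.

```python
MODEL_ID = "Qwen/Qwen2.5-7B-Instruct"  # assumed Hugging Face repository name


def build_messages(user_prompt: str, system_prompt: str = "You are a helpful assistant."):
    """Build a chat history in the role/content format used by chat templates."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate_reply(user_prompt: str, max_new_tokens: int = 512) -> str:
    """Load the model (downloading it on first use) and return one reply."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )

    # Render the chat history into the prompt string the model expects.
    text = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply("Summarize what a large language model is.")` would download the weights on first use, so a smaller checkpoint may be a better starting point on modest hardware.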

Benchmarking and Performance Insights

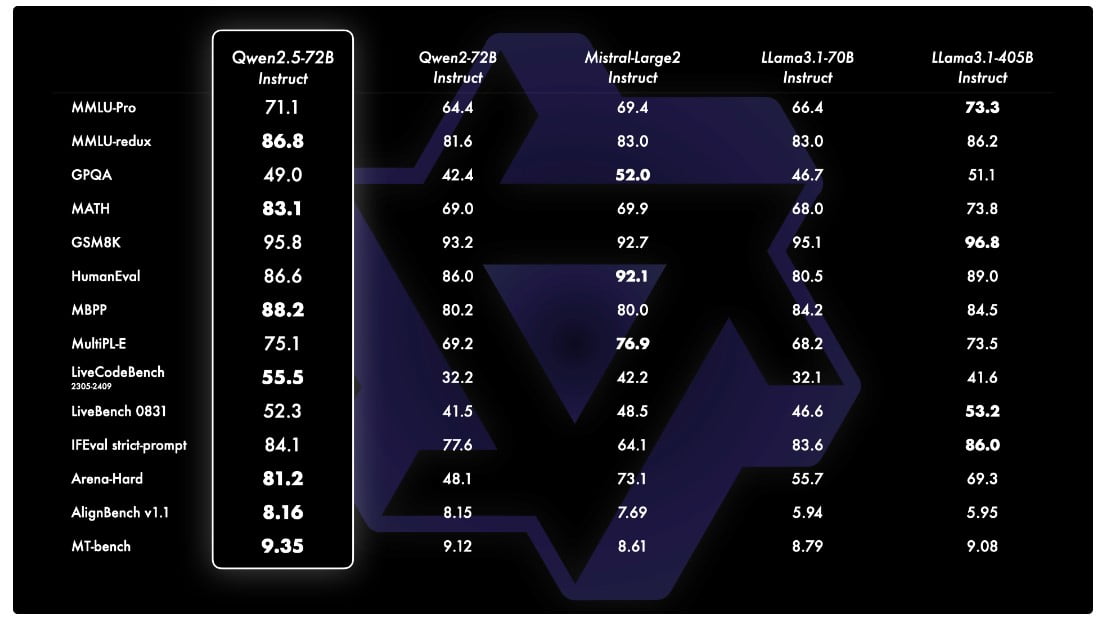

The Qwen2.5 series has undergone extensive benchmarking, showcasing its exceptional performance across a wide range of tasks and datasets. The flagship Qwen2.5-72B model has consistently outperformed its competitors, including the larger Llama-3.1-405B, in areas such as language understanding, reasoning, coding, and mathematics.

Excelling in General Tasks

On the MMLU (Massive Multitask Language Understanding) benchmark, the Qwen2.5-72B model achieved a score of 86.1, surpassing the performance of both Llama-3.1-70B and Mistral-Large-V2. This impressive result demonstrates the model’s robust language comprehension capabilities.

Similarly, Qwen2.5-72B scored 86.3 on BBH (BIG-Bench Hard), outperforming its competitors. BBH is a collection of especially difficult tasks drawn from the BIG-Bench suite, and it tests multi-step reasoning rather than conversational ability.

Advancing in Coding and Mathematics

The Qwen2.5 series has also made significant strides in coding and mathematical reasoning. The Qwen2.5-Coder model, for instance, achieved a remarkable score of 55.5 on the LiveCodeBench (2305-2409) task, outperforming the Qwen2-72B-Instruct by a wide margin.

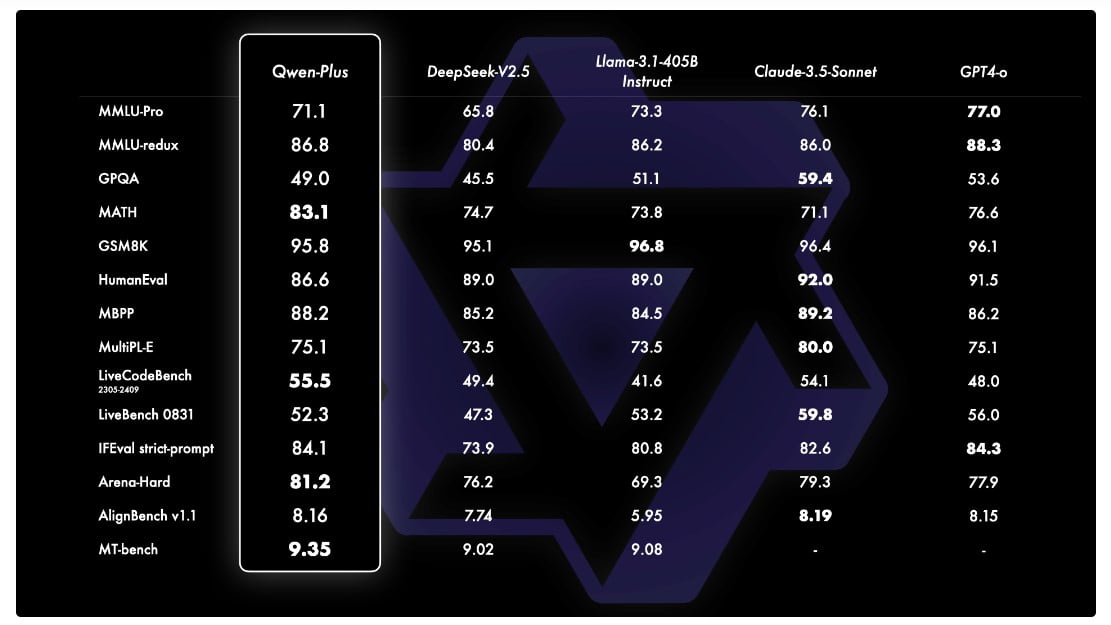

When it comes to mathematical aptitude, the Qwen2.5-Math-72B-Instruct model scored an impressive 83.1 on the MATH benchmark, demonstrating its ability to handle complex mathematical problems. This performance surpasses that of models like GPT-4o, Claude 3.5 Sonnet, and Llama-3.1-405B.

Multilingual Prowess

Recognizing the global nature of AI applications, the Qwen2.5 series boasts impressive multilingual capabilities, supporting over 29 languages, including Chinese, English, French, Spanish, Portuguese, German, Italian, Russian, Japanese, Korean, Vietnamese, Thai, and Arabic.

The models have been evaluated on various multilingual benchmarks, such as IFEval (Multilingual), AMMLU (Arabic), JMMLU (Japanese), KMMLU (Korean), IndoMMLU (Indonesian), and TurkishMMLU (Turkish). The Qwen2.5-72B-Instruct model has achieved exceptional results, often outperforming its competitors in these cross-linguistic tasks.

Qwen-Plus and Qwen-Turbo: Unlocking Advanced API Services

In addition to the open-weight models, Alibaba Cloud offers hosted API access to its flagship models through Qwen-Plus and Qwen-Turbo. These services are separate from the downloadable checkpoints. The 3 billion and 72 billion parameter models can also be downloaded, but they are released under Qwen-specific licenses rather than Apache 2.0.

The Qwen-Plus and Qwen-Turbo services are designed to cater to the needs of enterprises and developers who require the utmost performance and capabilities from their AI models. These API-based solutions offer seamless integration and scalability, making them ideal for large-scale deployments and mission-critical applications.

The Future of Qwen2.5 and AI Innovation

The release of Qwen2.5 marks a significant milestone in Alibaba Cloud’s ongoing efforts to push the boundaries of artificial intelligence. As the company continues to invest heavily in AI research and development, we can expect to see even more impressive advancements in the future.

Some potential areas of focus for the Qwen team may include further enhancing the models’ reasoning capabilities, improving their ability to understand and generate more nuanced and context-aware responses, and exploring deeper integration with other AI technologies, such as computer vision and speech recognition.

Additionally, the open-sourcing of Qwen2.5 models is a testament to Alibaba Cloud’s commitment to fostering a vibrant AI ecosystem. As researchers and developers worldwide leverage these powerful tools, we can anticipate a surge of innovative applications and breakthroughs that will shape the future of artificial intelligence.

Descriptions:

- Large Language Model (LLM): An AI system trained on vast amounts of text data to understand and generate human-like language

- Parameters: The variables that an AI model learns during training, with more parameters generally indicating a more complex and capable model

- Tokens: Units of text that the AI processes, which can be words or parts of words

- Benchmarks: Standardized tests used to compare the performance of different AI models

- MMLU: Massive Multitask Language Understanding, a benchmark that tests an AI’s knowledge across various subjects

- BBH: BIG-Bench Hard, a set of especially challenging tasks from the BIG-Bench suite that tests an AI’s multi-step reasoning

- Open-source: Making the code and model freely available for anyone to use, modify, or study

- API: Application Programming Interface, a way for different software applications to communicate and share data

Frequently Asked Questions:

- What makes Alibaba’s Qwen2.5 different from other AI models? Alibaba’s Qwen2.5 is a series of models designed for various tasks, including general language understanding, coding, and mathematics. It outperforms larger models in several benchmarks and offers versions with different sizes to suit various computational needs.

- Can Alibaba’s Qwen2.5 handle multiple languages? Yes, Alibaba’s Qwen2.5 supports over 29 languages, including Chinese, English, French, Spanish, and Arabic. It has performed exceptionally well on multilingual benchmarks, often surpassing competitors in cross-linguistic tasks.

- Is Alibaba’s Qwen2.5 available for public use? Yes. Alibaba has released open weights for Qwen2.5 models from 0.5 billion to 72 billion parameters; most are under the Apache 2.0 license, while the 3 billion and 72 billion versions use separate Qwen licenses. The hosted flagship models, Qwen-Plus and Qwen-Turbo, are available through API services.

- How does Alibaba’s Qwen2.5 perform in coding tasks? Alibaba’s Qwen2.5 includes a specialized Qwen2.5-Coder model that has shown exceptional performance in coding tasks. It outperforms larger models in several benchmarks and can handle a wide range of programming languages.

- What are the potential applications of Alibaba’s Qwen2.5? Alibaba’s Qwen2.5 has potential applications in various fields, including content creation, legal document processing, software development, mathematical problem-solving, and even multimodal tasks like text-to-video generation. Its versatility makes it suitable for both research and practical business applications.