Last Updated on April 16, 2024 8:34 am by Laszlo Szabo / NowadAIs | Published on April 16, 2024 by Laszlo Szabo / NowadAIs

AI Understands Your Home – Meet Meta’s OpenEQA: Open-Vocabulary Embodied Question Answering Benchmark – Key Notes

- Meta OpenEQA is a benchmark introduced by Meta AI to assess AI’s understanding of physical environments through open-vocabulary questions.

- It aims to help vision and language models (VLMs) close the gap with human performance.

- It consists of two tasks, episodic memory EQA and active EQA, which test an AI agent’s ability to recall past observations and to explore its environment, respectively.

- It lays the groundwork for practical applications, such as smart devices that help with daily tasks.

- It reveals a significant gap between current AI models and humans in spatial understanding, highlighting the need for further advances.

Introduction

In the quest for artificial general intelligence (AGI), Meta AI, the AI research arm of Facebook’s parent company Meta, has introduced a benchmark called Meta OpenEQA. This Open-Vocabulary Embodied Question Answering benchmark aims to measure, and help close, the gap between current vision and language models (VLMs) and human-level performance in understanding physical spaces. It assesses an AI agent’s ability to comprehend its environment by asking it open-vocabulary questions, paving the way for advances toward AGI.

The Need for Embodied AI

Imagine a world where AI agents act as the brain of home robots or smart glasses, capable of leveraging sensory modalities like vision to understand and communicate with humans effectively. This ambitious goal requires AI agents to develop a comprehensive understanding of the external world, commonly referred to as a “world model.”

Meta AI recognizes that achieving this level of comprehension is a daunting research challenge, but one that is essential for the development of AGI.

Traditional language models have made significant strides in linguistic understanding but lack real-time comprehension of the world around them. Meta AI aims to enhance these models by incorporating visual information, enabling them to make sense of their environment and provide meaningful responses to user queries. By combining vision and language, embodied AI agents have the potential to revolutionize various domains, from smart homes to wearables.

Introducing Meta OpenEQA

With the goal of advancing embodied AI, Meta AI has introduced the Open-Vocabulary Embodied Question Answering (OpenEQA) benchmark. OpenEQA measures an AI agent’s understanding of its environment by posing open-vocabulary questions about it. It consists of two tasks: episodic memory EQA and active EQA.

Episodic memory EQA requires the AI agent to answer questions based on its recollection of past experiences, testing its ability to retrieve relevant information from memory and give accurate responses. Active EQA, on the other hand, requires the agent to explore its environment to gather the information it needs before answering, testing its ability to interact with the physical world.
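The practical difference between the two tasks is what information the agent is allowed to use. The following toy sketch is a hypothetical illustration, not OpenEQA’s actual API: the `Observation` type, the function names, the `step` callback, and the idea of answering “where is X?” by matching object labels are all simplifying assumptions. In the episodic memory setting the agent can only consult a fixed, pre-recorded history; in the active setting it gathers new observations by acting, up to a step budget.

```python
from dataclasses import dataclass
from typing import Callable, Optional


# Hypothetical, simplified observation: the room the agent is in and the
# object labels it can see there (real agents work from raw images).
@dataclass
class Observation:
    room: str
    visible_objects: list[str]


def episodic_memory_answer(history: list[Observation], target: str) -> Optional[str]:
    """Episodic memory setting: answer "where is <target>?" using only a fixed,
    pre-recorded history. The agent cannot gather anything new."""
    for obs in reversed(history):  # check the most recent sighting first
        if target in obs.visible_objects:
            return obs.room
    return None  # never seen in the recorded history


def active_answer(step: Callable[[], Observation], target: str, budget: int) -> Optional[str]:
    """Active setting: the agent acts to gather new observations, up to `budget` steps."""
    for _ in range(budget):
        obs = step()  # hypothetical callback: move somewhere, then observe
        if target in obs.visible_objects:
            return obs.room
    return None  # budget exhausted without finding the target


# Usage: the badge was seen in the kitchen and later in the hallway.
history = [
    Observation("kitchen", ["mug", "office badge"]),
    Observation("hallway", ["office badge", "keys"]),
]
print(episodic_memory_answer(history, "office badge"))  # hallway

# Usage: a scripted tour stands in for a simulator the agent can move through.
tour = iter([Observation("bedroom", ["lamp"]), Observation("hallway", ["office badge"])])
print(active_answer(lambda: next(tour), "office badge", budget=5))  # hallway
```

Real agents answer free-form questions from visual input rather than looking up labels; the `budget` parameter simply reflects that active exploration has a cost that a recorded memory does not.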

The Significance of EQA

Embodied Question Answering (EQA) has practical implications well beyond research. Even a basic version of EQA could simplify everyday life. For instance, imagine you are getting ready to leave the house but can’t find your office badge. With EQA, you could simply ask your smart glasses or home robot where you left it, and the AI agent could draw on its episodic memory to answer. EQA has the potential to improve human-machine interaction and make AI agents genuinely useful companions in daily life.

Meta’s OpenEQA is not just about practical applications; it also serves as a tool to probe an AI agent’s understanding of the world. Similar to how we assess human comprehension, OpenEQA evaluates an AI agent’s ability to answer questions accurately and coherently. By releasing this benchmark, Meta AI aims to motivate and facilitate open research into improving AI agents’ understanding and communication skills, a crucial step towards achieving AGI.

The Gap Between VLMs and Human Performance

Meta AI conducted extensive benchmarking of state-of-the-art vision and language models (VLMs) using OpenEQA. The results revealed a gap between the best VLMs and human-level understanding. On questions that require spatial understanding in particular, even the most advanced VLMs were found to be “nearly blind”: access to visual content did not significantly improve their performance over language-only models.

For example, when asked “I’m sitting on the living room couch watching TV. Which room is directly behind me?”, the models essentially made random guesses instead of drawing on the visual episodic memory that should give them an understanding of the space. This indicates that both the perception and the reasoning capabilities of VLMs need further improvement before embodied AI agents powered by these models are ready for widespread use.

Meta’s OpenEQA: A Novel Benchmark for Embodied AI

Meta’s OpenEQA sets a new standard for evaluating embodied AI agents. It is the first open-vocabulary benchmark for EQA, giving researchers a comprehensive framework to measure and track progress in multimodal learning and scene understanding. The benchmark comprises over 1,600 non-templated question-and-answer pairs that are representative of real-world use cases and validated by human annotators. It also includes more than 180 videos and scans of physical environments, enabling AI agents to be tested in realistic scenarios.

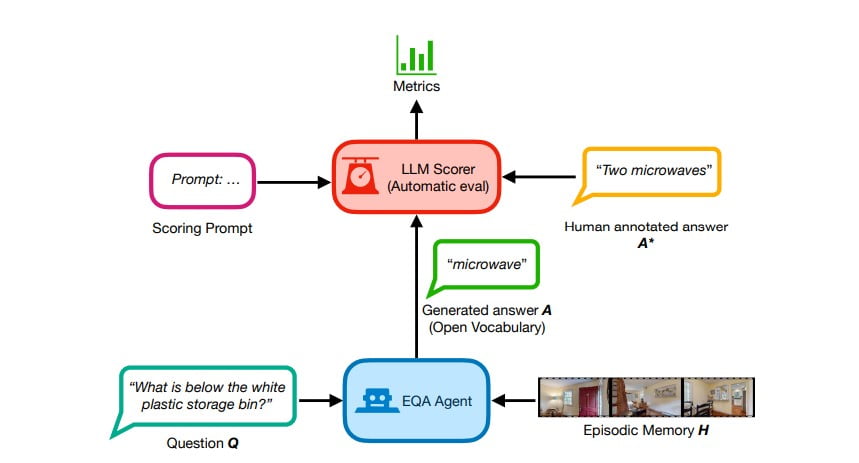

To evaluate the open-vocabulary answers generated by AI agents, Meta’s OpenEQA introduces LLM-Match, an automatic evaluation metric. Through blind user studies, Meta AI found that LLM-Match correlates closely with human judgments, supporting its use as an evaluation criterion. Together, the benchmark’s breadth and this automatic metric give researchers a reliable way to gauge their models’ performance and identify areas for improvement.
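The article does not spell out how LLM-Match turns individual judgments into a single benchmark number. A minimal sketch, assuming the scheme described in the OpenEQA paper: an LLM judge rates each predicted answer against the human reference answer on a 1-to-5 scale, and the ratings are rescaled to 0-100 and averaged. The function name `llm_match_score` is hypothetical, and the call to the judge model itself is omitted.

```python
def llm_match_score(judge_scores: list[int]) -> float:
    """Aggregate per-question judge ratings into a single 0-100 score.

    Assumes each rating is an integer from 1 (wrong) to 5 (fully correct),
    produced by an LLM judge comparing the model's answer with the human
    reference answer; the judge call itself is not shown.
    """
    if not judge_scores:
        raise ValueError("need at least one rating")
    for s in judge_scores:
        if not 1 <= s <= 5:
            raise ValueError(f"rating out of range 1-5: {s}")
    # Rescale each rating from [1, 5] to [0, 1], average, and report as a percentage.
    return 100.0 * sum((s - 1) / 4 for s in judge_scores) / len(judge_scores)


# Usage: one fully correct, one wrong, and one partially correct answer.
print(llm_match_score([5, 1, 3]))  # 50.0
```

Mapping a rating of 1 to 0 means a model that is always judged wrong scores 0, while partially correct answers earn partial credit instead of being counted as simply right or wrong.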

Advancing Embodied AI with Meta’s OpenEQA

Meta’s OpenEQA paves the way for advances in embodied AI. By providing a benchmark that assesses an AI agent’s understanding of its environment, Meta AI encourages researchers to pursue better spatial comprehension and more effective communication. The benchmark’s focus on open-vocabulary questions and real-world scenarios pushes researchers to develop AI agents that can navigate and interact with the physical world in a more human-like way.

Meta AI is actively working on building world models capable of performing well on OpenEQA and invites researchers worldwide to join the effort. The official release of the benchmark sets the stage for collaborative research and innovation that pushes the boundaries of AI and brings us closer to artificial general intelligence.

Definitions

- Meta: Formerly Facebook, Meta is a tech conglomerate known for pushing the boundaries in social media, virtual reality, and artificial intelligence research.

- Artificial General Intelligence (AGI): A hypothetical AI system able to understand, learn, and perform a wide range of intellectual tasks at or beyond human level, rather than excelling at a single narrow task.

- Meta’s OpenEQA: An open-vocabulary, embodied question-answering benchmark developed by Meta AI to evaluate and enhance AI’s understanding of and interaction with its physical environment.

- Vision and Language Models (VLMs): AI systems that integrate visual processing with language understanding to interpret and respond to multimodal inputs.

- Meta AI: A division of Meta dedicated to advancing AI technology, focusing on creating models that improve human-AI interaction and comprehension.

Frequently Asked Questions

- What is Meta’s OpenEQA and how does it impact AI research? Meta OpenEQA is a benchmark tool designed by Meta AI to test and enhance the ability of AI agents to understand and interact with their physical environment using open-vocabulary questions. It serves as a crucial step toward developing AI that can operate effectively in real-world settings.

- Why is the development of Meta’s OpenEQA significant for the future of smart devices? By improving how AI understands spatial environments, Meta’s OpenEQA paves the way for smarter, more intuitive AI agents in devices like robots and smart glasses, making technology more helpful in everyday tasks.

- How does Meta’s OpenEQA differ from traditional AI benchmarks? Unlike traditional benchmarks that may focus on either language or visual understanding, Meta’s OpenEQA combines these elements to evaluate AI’s multimodal comprehension and interaction within a 3D environment.

- What challenges does Meta’s OpenEQA address in the field of artificial intelligence? Meta’s OpenEQA addresses the challenge of creating AI that can understand context and perform tasks in a manner similar to humans, especially in navigating and responding to real-world environments.

- What future advancements can we expect from Meta’s OpenEQA? As Meta’s OpenEQA continues to evolve, we can expect advancements that lead to AI models with better spatial awareness and more effective communication skills, which are essential for the practical deployment of AI in everyday scenarios.