Last Updated on May 31, 2024 1:21 pm by Laszlo Szabo / NowadAIs | Published on May 28, 2024 by Laszlo Szabo / NowadAIs

AI for Deaf People: Introducing SignLLM – Key Notes

- SignLLM: A pioneering multilingual sign language production model.

- Prompt2Sign Dataset: A diverse dataset supporting the training of SignLLM.

- Multilingual Capability: Supports eight distinct sign languages.

- Reinforcement Learning: Enhances training efficiency and model quality.

- Text-to-Gloss Integration: Ensures linguistically accurate sign language output.

- Qualitative Improvements: Achieves realistic and visually compelling sign language gestures.

- Ablation Studies: Identifies key drivers of model success.

Introduction

Sign language is a vital means of communication for millions of individuals worldwide, yet the development of technologies to support and enhance sign language production has lagged behind advancements in spoken language processing. That is, until the work of the researchers behind SignLLM – the first comprehensive multilingual sign language production model.

Now we delve into the innovative SignLLM framework, exploring its foundations, key features, and the transformative impact it promises to have on the field of sign language technology. From the creation of the Prompt2Sign dataset to the development of novel sign language generation techniques, this exploration will shed light on how SignLLM is redefining the boundaries of what is possible in sign language production.

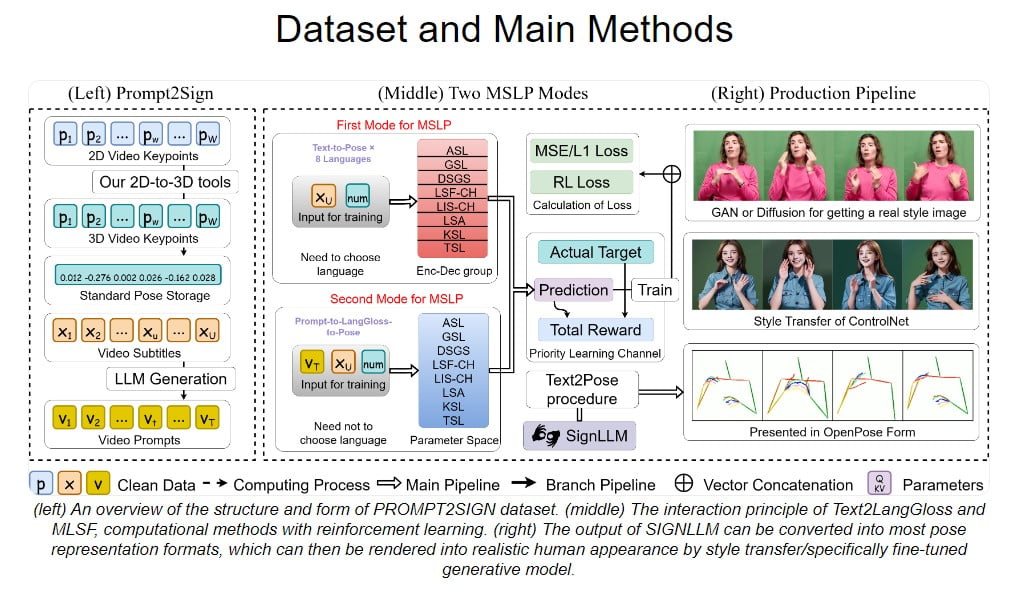

The Prompt2Sign Dataset: Laying the Foundation

At the heart of the SignLLM project lies the Prompt2Sign dataset – a pioneering resource that brings together sign language data from a diverse array of sources, including American Sign Language (ASL) and seven other sign languages. By meticulously transforming a vast collection of videos into a streamlined, model-friendly format, the Prompt2Sign dataset has laid the groundwork for the development of advanced sign language production technologies.

One of the key challenges in creating this dataset was the need to optimize the data for training with translation models like seq2seq and text2text. The researchers tackled this challenge head-on, developing innovative techniques to ensure the data was not only comprehensive but also perfectly suited for the training of cutting-edge sign language generation models.

Introducing SignLLM: LLM for Good

Building upon the foundation of the Prompt2Sign dataset, the SignLLM team has developed a newsign language production model that sets a new standard in the field. This multilingual model, the first of its kind, boasts two novel SLP (Sign Language Production) modes that enable the generation of sign language gestures from input text or prompts.

At the core of SignLLM’s success is its ability to leverage a new loss function and a reinforcement learning-based module. These components work in tandem to accelerate the training process, empowering the model to autonomously sample high-quality data and enhance its sign language generation capabilities.

Multilingual Mastery: SignLLM’s Capabilities

One of the most remarkable aspects of SignLLM is its ability to seamlessly handle sign language production across multiple languages. By harnessing the breadth of the Prompt2Sign dataset, the model has demonstrated state-of-the-art performance on SLP tasks in eight distinct sign languages, a testament to its versatility and adaptability.

Through extensive benchmarking, the researchers have showcased SignLLM’s prowess in areas such as American Sign Language Production (ASLP), German Sign Language Production (GSLP), and beyond. These empirical studies have not only validated the model’s effectiveness but have also provided valuable insights into the nuances and complexities of sign language generation.

Reinforcement Learning: Accelerating the Training Process

A key innovation at the heart of SignLLM is its incorporation of reinforcement learning techniques. By leveraging this approach, the researchers have been able to significantly enhance the model’s ability to autonomously sample high-quality data, thereby accelerating the training process and improving the overall quality of the generated sign language gestures.

Through an iterative update process that involves the user, agent, environment, and a Priority Learning Channel (PLC), SignLLM’s reinforcement learning module has demonstrated its ability to optimize the model’s performance, leading to impressive results across a range of sign language production tasks.

Enhancing Sign Language Production through Text-to-Gloss Integration

In addition to its reinforcement learning capabilities, SignLLM has also benefited from the integration of a Text-to-Gloss framework. This allows the model to produce sign language gloss with the necessary linguistic attributes, while also capturing profound characteristics through the use of variables within the neural network architecture.

By seamlessly blending these textual and gestural elements, SignLLM has been able to generate sign language output that is not only visually compelling but also linguistically accurate and expressive. This integration of text-to-gloss techniques has been a crucial factor in the model’s ability to achieve state-of-the-art performance in sign language production.

Qualitative Improvements: Enhancing the Realism of Sign Language Gestures

Beyond its impressive quantitative performance, SignLLM has also made significant strides in improving the qualitative aspects of sign language generation. Through the incorporation of style transfer models and fine-tuned generative approaches, the team has been able to render the model’s output in a more realistic and visually appealing manner.

The result is a set of synthetic sign language videos that capture the nuances and subtleties of human sign language gestures with remarkable fidelity. This advancement in the visual quality of the generated content not only enhances the user experience but also paves the way for more seamless integration of sign language technology into various applications.

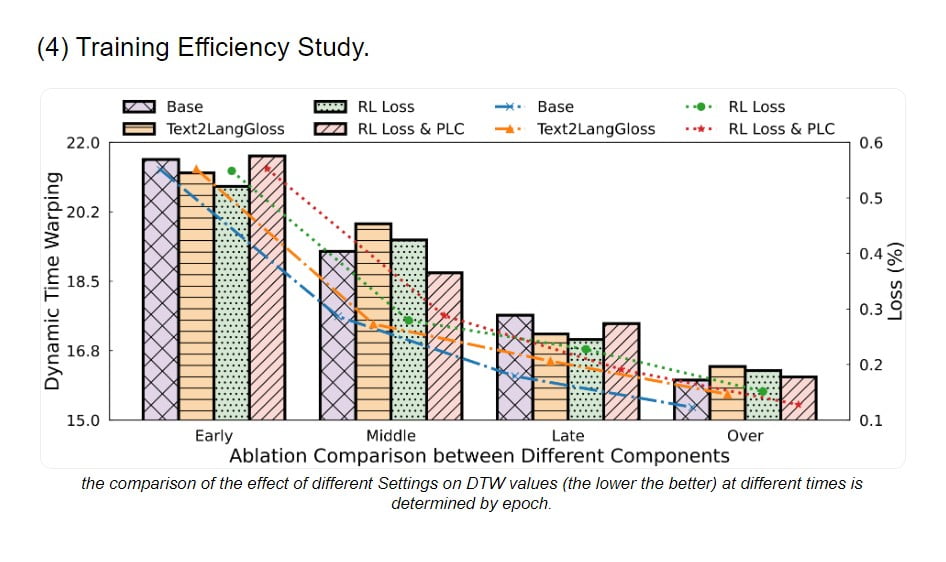

Ablation Studies: Unveiling the Drivers of SignLLM’s Success

To better understand the factors contributing to SignLLM’s exceptional performance, the research team has conducted a series of ablation studies. These in-depth analyses have shed light on the impact of various data augmentation techniques, loss functions, and architectural choices on the model’s overall effectiveness.

By systematically evaluating the performance of SignLLM under different settings, the researchers have been able to identify the key drivers of the model’s success. This knowledge not only informs future iterations of the SignLLM framework but also provides valuable insights for the broader sign language technology community.

Efficient Training: Optimizing the Learning Process

Recognizing the importance of training efficiency in the development of large-scale sign language production models, the SignLLM team has dedicated effort to optimizing the learning process. Through careful experimentation and analysis, they have identified strategies that can significantly accelerate the training of SignLLM, without compromising the quality of the generated output.

These efficiency-focused techniques, including the use of novel loss functions and specialized training modules, have enabled the researchers to train SignLLM more quickly and effectively, ultimately leading to faster development cycles and quicker deployment of the technology.

Bridging the Gap: SignLLM’s Potential Impact

The introduction of SignLLM represents a crucial step in bridging the gap between spoken language processing and sign language technology. By providing a comprehensive, multilingual solution for sign language production, this groundbreaking model has the potential to transform the way individuals with hearing impairments or deafness communicate and engage with the world around them.

Beyond its immediate impact on the lives of sign language users, SignLLM also holds promise for broader applications in areas such as education, entertainment, and accessibility. As the technology continues to evolve and expand, the researchers behind SignLLM are committed to exploring new frontiers and driving the field of sign language technology forward.

Ushering in a New Era of Sign Language Technology

The introduction of SignLLM marks a pivotal moment in the history of sign language technology. By leveraging the power of large language models, multilingual datasets, and advanced computational AI techniques, this pioneering framework has demonstrated the immense potential for sign language production to become a more accessible and integrated part of our digital landscape.

As the SignLLM project continues to evolve and expand, the researchers behind it remain steadfast in their commitment to driving innovation, fostering collaboration, and empowering individuals with hearing impairments or deafness to communicate and engage with the world more effectively. The future of sign language technology is bright, and SignLLM is leading the charge towards a more inclusive and accessible world.

Definitions

- SignLLM: A comprehensive multilingual sign language production model designed to generate sign language gestures from text prompts.

- American Sign Language (ASL): A complete, natural language used by the deaf community in the United States and parts of Canada.

- Prompt2Sign Dataset: A dataset comprising sign language data from multiple sources, optimized for training sign language generation models.

- Priority Learning Channel (PLC): A reinforcement learning-based module used to enhance the training process by prioritizing high-quality data sampling.

Frequently Asked Questions

- What is SignLLM? SignLLM is a state-of-the-art multilingual sign language production model that can generate sign language gestures from text prompts. It supports eight different sign languages, including American Sign Language (ASL).

- How does SignLLM utilize the Prompt2Sign dataset? The Prompt2Sign dataset is a foundational resource for SignLLM, providing diverse and high-quality sign language data. This dataset enables the model to perform effectively across multiple languages.

- What is the Priority Learning Channel (PLC) in SignLLM? The Priority Learning Channel (PLC) is a reinforcement learning module in SignLLM that improves the training process by autonomously sampling high-quality data. This enhances the model’s performance and training efficiency.

- How does SignLLM ensure the quality of generated sign language gestures? SignLLM incorporates a Text-to-Gloss framework and style transfer models, which help produce linguistically accurate and visually compelling sign language gestures, enhancing the overall quality of the output.

- What are the potential applications of SignLLM? SignLLM can be used in various fields such as education, entertainment, and accessibility. It aims to improve communication for individuals with hearing impairments or deafness by providing an advanced tool for sign language production.