Last Updated on June 10, 2024 10:44 am by Laszlo Szabo / NowadAIs | Published on June 10, 2024 by Laszlo Szabo / NowadAIs

Qwen 2-72B by Alibaba Cloud – The AI Powerhouse Beats Top LLM Models like Llama-3-70B

Key Notes

- Qwen 2-72B is the flagship large language model developed by the Qwen team at Alibaba Cloud

- It boasts state-of-the-art performance in language understanding, multilingual capabilities, coding, mathematics, and reasoning

- The model features advanced architectural elements like SwiGLU activation, attention QKV bias, and grouped query attention

- Qwen 2-72B was trained on extensive multilingual data and refined through supervised finetuning and direct preference optimization

- It supports context lengths up to 128K tokens, enabling exceptional handling of long-form content

- The model demonstrates superior safety and responsibility, outperforming leading alternatives in handling sensitive prompts

Introduction

In the rapidly evolving landscape of artificial intelligence, the release of Qwen 2-72B is a notable milestone. This large language model, developed by the Qwen team at Alibaba Cloud, sets a high bar for language understanding, multilingual proficiency, and task solving.

Qwen 2-72B is the product of careful research and rigorous training. With 72 billion parameters, the model is built to perform across a wide range of domains, from natural language processing and code generation to mathematical reasoning.

Multilingual Mastery

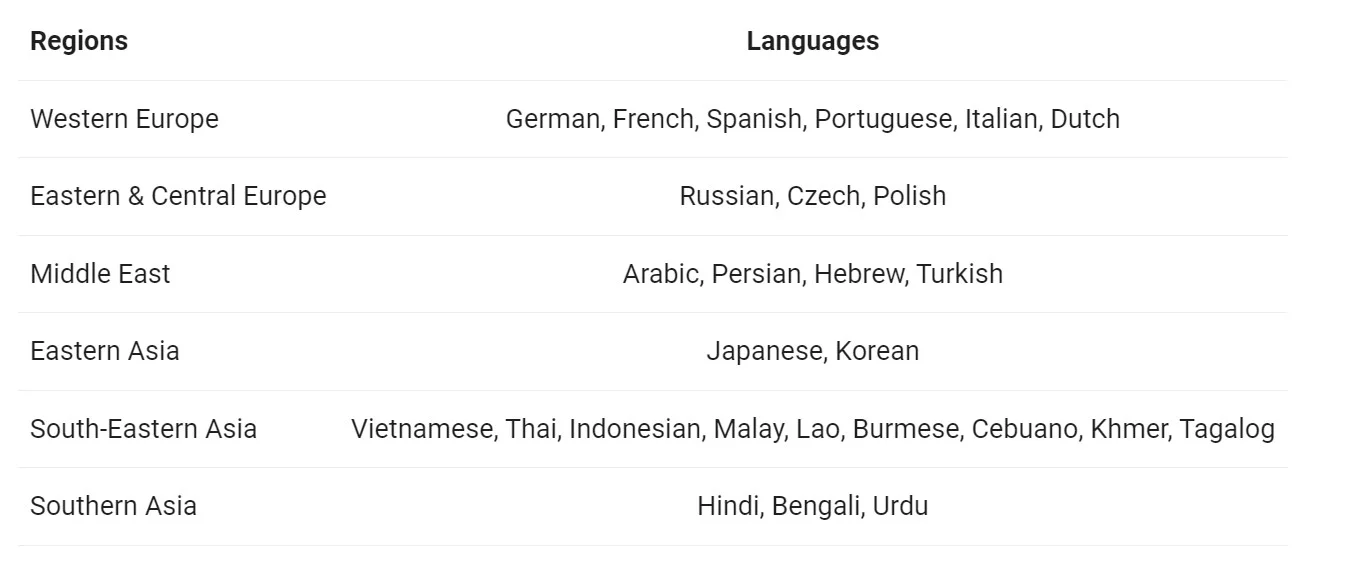

One of the standout features of Qwen 2-72B is its exceptional multilingual capabilities. Trained on an expansive dataset spanning 27 languages beyond English and Chinese, the model has developed a deep and versatile understanding of diverse linguistic landscapes. This linguistic versatility allows Qwen 2-72B to seamlessly navigate cross-lingual communication, breaking down barriers and fostering global collaboration.

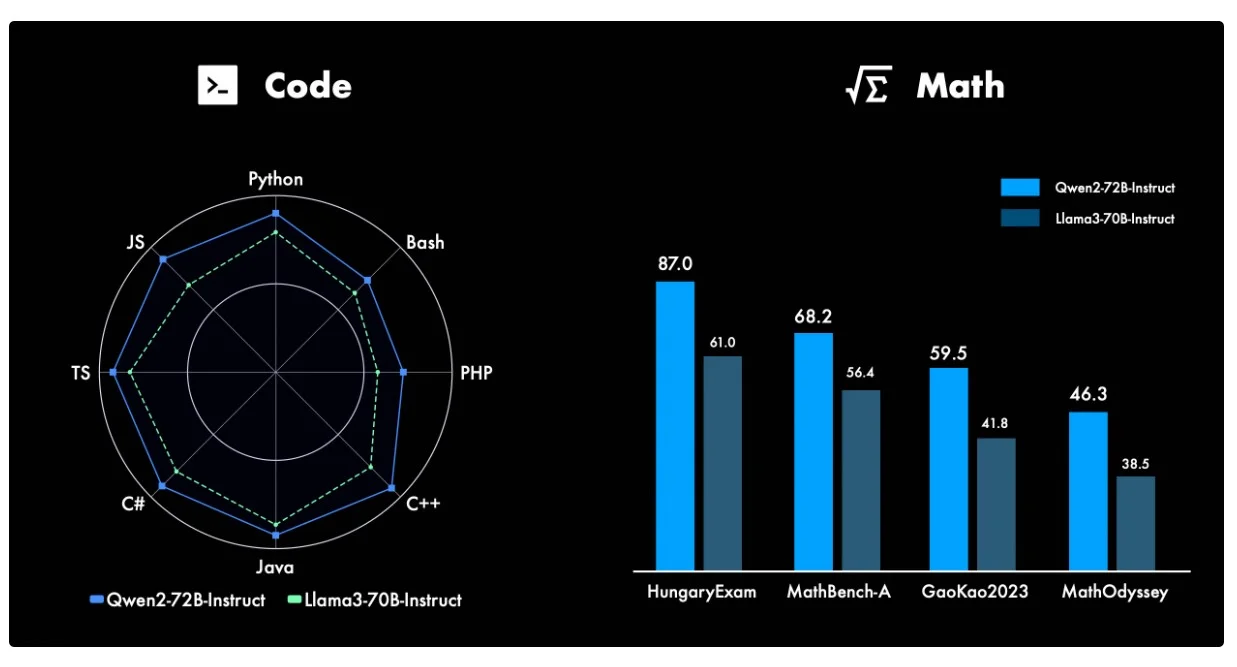

Coding and Mathematics Prowess

Qwen 2-72B’s strengths extend beyond natural language processing. The model has also demonstrated strong capabilities in coding and mathematics. Through targeted training and refinement, the Qwen team has given the model a working understanding of programming languages, algorithms, and mathematical concepts, enabling it to tackle complex coding tasks and multi-step mathematical problems.

Long-Form Content Mastery

Qwen 2-72B also handles long-form content well. With support for context lengths of up to 128,000 tokens, the model can read and respond coherently to extensive, multi-paragraph inputs. This makes it a valuable asset in applications where detailed, nuanced understanding of lengthy texts is paramount.
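As a rough illustration of what a fixed context window means in practice, the sketch below budgets a long input against the 128,000-token figure quoted above. The helper names `fits_in_context` and `split_into_windows` are hypothetical and not part of any Qwen tooling, and the exact limit should be taken from the model card rather than from this constant.

```python
# Hypothetical helpers for budgeting a prompt against a fixed context window.
# 128_000 is the figure quoted in the article; check the model card for the exact limit.
CONTEXT_WINDOW = 128_000


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    window: int = CONTEXT_WINDOW) -> bool:
    """Return True if the prompt plus the requested output fits in the window."""
    return prompt_tokens + max_new_tokens <= window


def split_into_windows(token_ids: list[int], max_new_tokens: int,
                       window: int = CONTEXT_WINDOW) -> list[list[int]]:
    """Split token IDs into consecutive chunks that each leave room for the output."""
    budget = window - max_new_tokens
    if budget <= 0:
        raise ValueError("max_new_tokens must be smaller than the context window")
    return [token_ids[i:i + budget] for i in range(0, len(token_ids), budget)]
```

A document that fits in one window can be sent as a single prompt; anything larger has to be chunked, summarized, or retrieved selectively, which is where a long window saves work.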

Safety and Responsibility

In an era where the responsible development of AI technology is of paramount importance, Qwen 2-72B stands out for its unwavering commitment to safety and ethical considerations. Extensive testing has demonstrated the model’s ability to navigate sensitive prompts and content, consistently producing outputs that are aligned with human values and free from harmful or unethical elements.

Qwen 2-72B in Action

The versatility of Qwen 2-72B is truly breathtaking, with the model capable of seamlessly transitioning between a wide range of tasks and applications. From natural language generation and question answering to code interpretation and mathematical problem-solving, this AI powerhouse has proven itself to be a true jack-of-all-trades.

Deployment and Integration

Qwen 2-72B has been designed with scalability and accessibility in mind, making it a good candidate for deployment across a range of platforms and use cases. The model is available through widely used frameworks such as Hugging Face Transformers and ModelScope, so developers and researchers can load and run it with familiar tooling.
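A minimal sketch of loading the model through Hugging Face Transformers might look like the following. It assumes the instruction-tuned checkpoint published as `Qwen/Qwen2-72B-Instruct`, that the `transformers`, `torch`, and `accelerate` packages are installed, and that enough GPU memory is available for a 72-billion-parameter model. The helper names `build_messages` and `generate_reply` are illustrative only.

```python
# Assumed checkpoint name on the Hugging Face Hub.
MODEL_ID = "Qwen/Qwen2-72B-Instruct"


def build_messages(user_prompt: str,
                   system_prompt: str = "You are a helpful assistant.") -> list[dict]:
    """Build a chat-style message list in the role/content format."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate_reply(user_prompt: str, max_new_tokens: int = 256) -> str:
    """Load the model and generate one reply. Downloads the weights on first use."""
    # Imported lazily so build_messages can be used without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )

    # Render the messages with the tokenizer's chat template, then tokenize.
    text = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply("Summarize grouped query attention in two sentences.")` would return the model's answer as a string. For smaller hardware, a quantized or smaller checkpoint from the same family is the more realistic starting point.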

Benchmarking and Performance

Rigorous testing and evaluation have consistently demonstrated Qwen 2-72B’s superior performance across a wide range of benchmarks. In direct comparisons with other state-of-the-art models, such as Llama-3-70B, the Qwen 2-72B model has emerged as the clear frontrunner, showcasing its unparalleled capabilities in areas like natural language understanding, knowledge acquisition, and task-solving prowess.

The Road Ahead

As the Qwen 2-72B model continues to captivate the AI community, the future holds immense promise. The Qwen team’s unwavering commitment to innovation and their relentless pursuit of excellence suggest that this is merely the beginning of a new era in large language model development. With ongoing refinements, expansions, and the exploration of multimodal capabilities, Qwen 2-72B is poised to redefine the boundaries of what’s possible in the world of artificial intelligence.

Definitions

- Qwen 2-72B: A state-of-the-art large language model developed by Alibaba Cloud with 72 billion parameters, excelling in multilingual capabilities, coding, mathematics, and long-form content handling.

- HungaryExam: A benchmarking dataset or tool used to evaluate the performance of AI models in language understanding and related tasks.

- MMLU Performance Metrics: Metrics used to evaluate the performance of large language models on the Massive Multitask Language Understanding (MMLU) benchmark.

- Mixtral-8x LLM: Another large language model that serves as a point of comparison for evaluating the capabilities of Qwen 2-72B.

- SwiGLU Activation: A gated activation function that combines the Swish (SiLU) activation with a gated linear unit. Qwen 2-72B uses it in its feed-forward layers.

- QKV Bias: Learnable bias terms added to the query, key, and value projections in the attention mechanism.
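To make the SwiGLU definition concrete, here is a small pure-Python sketch of a SwiGLU feed-forward block in its commonly used form, `down(silu(gate(x)) * up(x))`. The function and weight names (`swiglu_ffn`, `w_gate`, `w_up`, `w_down`) are illustrative, and the sketch is not taken from the Qwen codebase.

```python
import math


def silu(x: float) -> float:
    """SiLU (Swish) activation: x * sigmoid(x). Fine for moderate input values."""
    return x / (1.0 + math.exp(-x))


def matvec(matrix: list[list[float]], vector: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def swiglu_ffn(x, w_gate, w_up, w_down):
    """Gated feed-forward block: down( silu(gate(x)) * up(x) )."""
    gate = [silu(g) for g in matvec(w_gate, x)]   # gating branch
    up = matvec(w_up, x)                          # linear branch
    hidden = [g * u for g, u in zip(gate, up)]    # element-wise gating
    return matvec(w_down, hidden)
```

The gating branch decides, per hidden unit, how much of the linear branch passes through, which is the difference from a plain feed-forward layer with a single activation.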

Frequently Asked Questions

Q: What makes Qwen 2-72B stand out from other large language models? A: Qwen 2-72B boasts several key differentiators, including its state-of-the-art architectural design, exceptional multilingual capabilities, and unparalleled performance in coding and mathematics. Additionally, the model’s ability to handle long-form content up to 128,000 tokens and its strong commitment to safety and responsible development set it apart from the competition.

Q: How does Qwen 2-72B’s performance compare to other leading models? A: Rigorous benchmarking has consistently demonstrated Qwen 2-72B’s superiority over other state-of-the-art models, such as Llama-3-70B. The Qwen 2-72B model has outperformed its peers across a wide range of evaluations, including natural language understanding, knowledge acquisition, and task-solving capabilities.

Q: What are the key architectural innovations that enable Qwen 2-72B’s impressive capabilities? A: Qwen 2-72B incorporates architectural elements such as SwiGLU activation, attention QKV bias, and grouped query attention. Grouped query attention lets several query heads share one set of key and value heads, which reduces memory use and speeds up inference, while SwiGLU and QKV bias are refinements to the feed-forward and attention layers.
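The head sharing in grouped query attention and the role of a projection bias can be sketched in a few lines of Python. The head counts used in the usage note (64 query heads, 8 key/value heads) are illustrative values rather than figures from this article, and all function names are hypothetical.

```python
def kv_head_for_query_head(query_head: int, num_query_heads: int,
                           num_kv_heads: int) -> int:
    """Map a query head index to the key/value head it shares in grouped query attention."""
    if num_query_heads % num_kv_heads != 0:
        raise ValueError("num_query_heads must be a multiple of num_kv_heads")
    group_size = num_query_heads // num_kv_heads
    return query_head // group_size


def kv_cache_ratio(num_query_heads: int, num_kv_heads: int) -> float:
    """Key/value cache size relative to standard multi-head attention."""
    return num_kv_heads / num_query_heads


def project_with_bias(x: list[float], weight: list[list[float]],
                      bias: list[float]) -> list[float]:
    """Linear projection with a bias term, as used for Q, K, and V when QKV bias is enabled."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]
```

With 64 query heads and 8 key/value heads, query heads 0 through 7 all read from key/value head 0, and `kv_cache_ratio(64, 8)` gives 0.125, meaning the cache holds one eighth of what full multi-head attention would store.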

Q: How does Qwen 2-72B’s multilingual capability set it apart? A: Qwen 2-72B’s exceptional multilingual capabilities are a testament to the Qwen team’s commitment to fostering global collaboration and breaking down linguistic barriers. Trained on an expansive dataset spanning 27 languages beyond English and Chinese, the model has developed a deep and versatile understanding of diverse linguistic landscapes, allowing it to seamlessly navigate cross-lingual communication.

Q: What measures have been taken to ensure the safety and responsibility of Qwen 2-72B? A: The Qwen team has placed a strong emphasis on the responsible development of Qwen 2-72B. Extensive testing has demonstrated the model’s ability to navigate sensitive prompts and content, consistently producing outputs that are aligned with human values and free from harmful or unethical elements. This commitment to safety and ethical considerations sets Qwen 2-72B apart as a responsible and trustworthy AI solution.